FLUX.1-Kontext-dev: Image Augmentation AI Model

AI model for augmenting images with text instructions

Black Forest Labs has released FLUX.1-Kontext-dev, an advanced image-to-image AI model that augments existing images using text instructions.

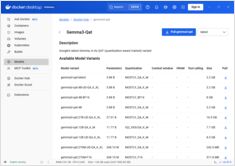

Enable GPU acceleration for Docker Model Runner with NVIDIA CUDA support

Docker Model Runner is Docker’s official tool for running AI models locally, but enabling NVIDIA GPU acceleration in it requires specific configuration.

Cut LLM costs by 80% with smart token optimization

Token optimization is the critical skill separating cost-effective LLM applications from budget-draining experiments.

GPT-OSS 120b benchmarks on three AI platforms

I dug up some interesting performance tests of GPT-OSS 120b running on Ollama across three different platforms: NVIDIA DGX Spark, Mac Studio, and RTX 4080. The GPT-OSS 120b model from the Ollama library weighs in at 65GB, which means it doesn’t fit into the 16GB VRAM of an RTX 4080 (or the newer RTX 5080).

Build MCP servers for AI assistants with Python examples

The Model Context Protocol (MCP) is revolutionizing how AI assistants interact with external data sources and tools. In this guide, we’ll explore how to build MCP servers in Python, with examples focused on web search and scraping capabilities.

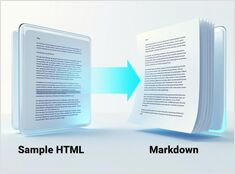

Python for converting HTML to clean, LLM-ready Markdown

Converting HTML to Markdown is a fundamental task in modern development workflows, particularly when preparing web content for Large Language Models (LLMs), documentation systems, or static site generators like Hugo.

Quick reference for Docker Model Runner commands

Docker Model Runner (DMR) is Docker’s official solution for running AI models locally, introduced in April 2025. This cheatsheet provides a quick reference for all essential commands, configurations, and best practices.

Compare Docker Model Runner and Ollama for local LLM

Running large language models (LLMs) locally has become increasingly popular for privacy, cost control, and offline capabilities. The landscape shifted significantly in April 2025 when Docker introduced Docker Model Runner (DMR), its official solution for AI model deployment.

Specialized chips are making AI inference faster, cheaper

The future of AI isn’t just about smarter models - it’s about smarter silicon. Specialized hardware for LLM inference is driving a revolution similar to Bitcoin mining’s shift to ASICs.

Availability, real-world retail pricing across six countries, and comparison against Mac Studio.

NVIDIA DGX Spark is real, on sale Oct 15, 2025, and targeted at CUDA developers needing local LLM work with an integrated NVIDIA AI stack. US MSRP $3,999; UK/DE/JP retail is higher due to VAT and channel. AUD/KRW public sticker prices are not yet widely posted.

Comparing speed, parameters, and performance of these two models

Here is a comparison of Qwen3:30b and GPT-OSS:20b, focusing on instruction following, performance parameters, specs, and speed.

+ Specific Examples Using Thinking LLMs

In this post, we’ll explore two ways to connect your Python application to Ollama: (1) via the HTTP REST API and (2) via the official Ollama Python library.
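As a rough sketch of the two approaches, assuming Ollama is listening on its default local address (`http://localhost:11434`) and that the `ollama` package is installed for the second option. The helper names here (`build_generate_request`, `generate_via_rest`, `generate_via_library`) are made up for illustration and are not taken from the post itself.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate_via_rest(model: str, prompt: str) -> str:
    """Option 1: call the HTTP REST API directly (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


def generate_via_library(model: str, prompt: str) -> str:
    """Option 2: use the official Python library (pip install ollama)."""
    import ollama  # imported lazily so the REST path works without it

    reply = ollama.chat(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return reply["message"]["content"]
```

With a model already pulled, for example `qwen3:30b`, either `generate_via_rest("qwen3:30b", "Hello")` or `generate_via_library("qwen3:30b", "Hello")` should return the reply text. The REST route avoids an extra dependency, while the library hides the request plumbing.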

Not very nice.

Ollama’s GPT-OSS models have recurring issues handling structured output, especially when used with frameworks and tools like LangChain, the OpenAI SDK, vLLM, and others.

Slightly different APIs require a special approach.

Here’s a side-by-side support comparison of structured output (getting reliable JSON back) across popular LLM providers, plus minimal Python examples.

A couple of ways to get structured output from Ollama

Large Language Models (LLMs) are powerful, but in production we rarely want free-form paragraphs. Instead, we want predictable data: attributes, facts, or structured objects you can feed into an app. That’s LLM Structured Output.
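One way this can look with Ollama, as a minimal sketch: the chat endpoint accepts a JSON schema in its `format` field, and the reply content comes back as a JSON string that the application parses and checks. The schema, field names, and helper functions below are hypothetical and exist only for illustration.

```python
import json

# Hypothetical schema for illustration: the shape we want the model to return.
CITY_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "population": {"type": "integer"},
    },
    "required": ["name", "population"],
}


def build_chat_payload(model: str, prompt: str, schema: dict) -> dict:
    """Build a /api/chat request body that asks for schema-constrained JSON."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": schema,  # JSON schema passed in the request's format field
        "stream": False,
    }


def parse_structured_reply(raw_content: str, schema: dict) -> dict:
    """Parse the reply text and check that the required keys are present."""
    data = json.loads(raw_content)
    missing = [key for key in schema.get("required", []) if key not in data]
    if missing:
        raise ValueError(f"reply is missing required keys: {missing}")
    return data
```

Checking the parsed reply on the application side is a deliberate choice here: even with a schema in the request, it is safer not to assume every model honours it.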

My own test of ollama model scheduling

Here I compare how much VRAM the new version of Ollama allocates for a model against the previous version. The new version is worse.