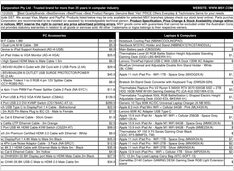

Comparing LLM performance on Ollama with a 16GB VRAM GPU

LLM speed test on RTX 4080 with 16GB VRAM

Running large language models locally gives you privacy, offline capability, and zero API costs. This benchmark shows what to expect from 9 popular LLMs running on Ollama on an RTX 4080 with 16GB of VRAM.