Cross-Modal Embeddings: Bridging AI Modalities

Unify text, images, and audio in shared embedding spaces

Cross-modal embeddings represent a breakthrough in artificial intelligence, enabling understanding and reasoning across different data types within a unified representation space.

This technology powers modern multimodal applications from image search to content generation.

This image is from the article:

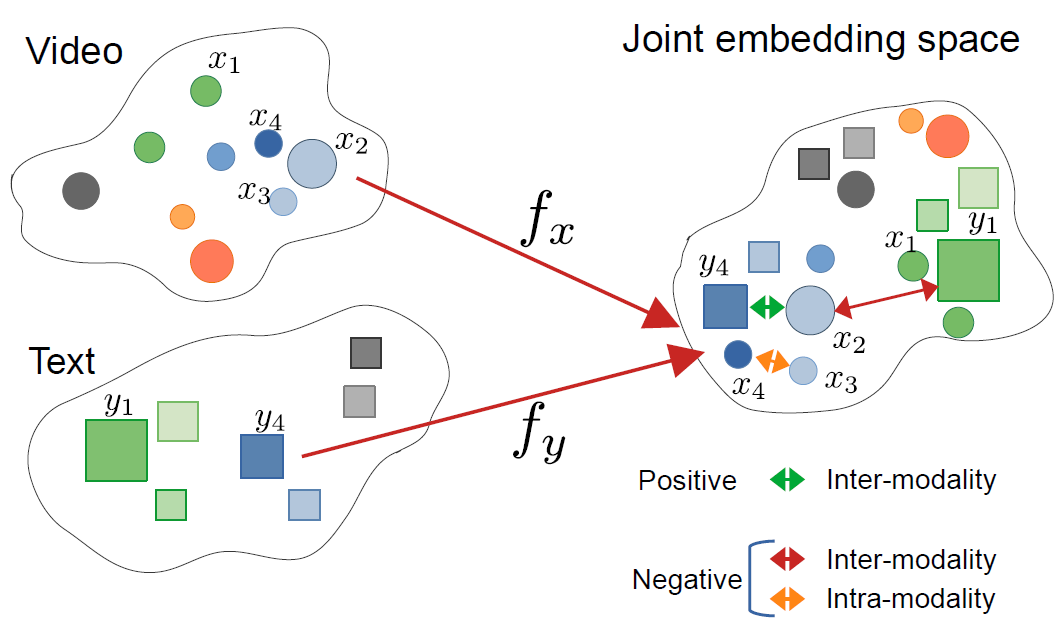

CrossCLR: cross-modal contrastive learning for multi-modal video representations, by Mohammadreza Zolfaghari and others

This image is from the article:

CrossCLR: cross-modal contrastive learning for multi-modal video representations, by Mohammadreza Zolfaghari and others

Understanding Cross-Modal Embeddings

Cross-modal embeddings are vector representations that encode information from different modalities—such as text, images, audio, and video - into a shared embedding space. Unlike traditional single-modality embeddings, cross-modal approaches learn a unified representation where semantically similar concepts cluster together regardless of their original format.

What Are Cross-Modal Embeddings?

At their core, cross-modal embeddings solve a critical challenge in AI: how to compare and relate information across different data types. A traditional image classifier can only work with images, while a text model handles only text. Cross-modal embeddings bridge this gap by projecting different modalities into a common vector space where:

- An image of a cat and the word “cat” have similar embedding vectors

- Semantic relationships are preserved across modalities

- Distance metrics (cosine similarity, Euclidean distance) measure cross-modal similarity

This unified representation enables powerful capabilities like searching images using text queries, generating captions from images, or even zero-shot classification without task-specific training.

The Architecture Behind Cross-Modal Learning

Modern cross-modal systems typically employ dual-encoder architectures with contrastive learning objectives:

Dual Encoders: Separate neural networks encode each modality. For example, CLIP uses:

- A vision transformer (ViT) or ResNet for images

- A transformer-based text encoder for language

Contrastive Learning: The model learns by maximizing similarity between matching pairs (e.g., image and its caption) while minimizing similarity between non-matching pairs. The contrastive loss function can be expressed as:

[ \mathcal{L} = -\log \frac{\exp(\text{sim}(v_i, t_i) / \tau)}{\sum_{j=1}^{N} \exp(\text{sim}(v_i, t_j) / \tau)} ]

where (v_i) is the image embedding, (t_i) is the text embedding, (\text{sim}) is similarity (typically cosine), and (\tau) is a temperature parameter.

Key Technologies and Models

CLIP: Pioneering Vision-Language Understanding

OpenAI’s CLIP (Contrastive Language-Image Pre-training) revolutionized the field by training on 400 million image-text pairs from the internet. The model architecture consists of:

import torch

from transformers import CLIPProcessor, CLIPModel

from PIL import Image

# Load pre-trained CLIP model

model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")

processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

# Prepare inputs

image = Image.open("example.jpg")

texts = ["a photo of a cat", "a photo of a dog", "a photo of a bird"]

# Process and get embeddings

inputs = processor(text=texts, images=image, return_tensors="pt", padding=True)

# Get cross-modal similarity scores

outputs = model(**inputs)

logits_per_image = outputs.logits_per_image

probs = logits_per_image.softmax(dim=1)

print(f"Probabilities: {probs}")

CLIP’s key innovation was scale and simplicity. By training on massive web-scale data without manual annotations, it achieved remarkable zero-shot transfer capabilities. How does CLIP differ from traditional vision models? Unlike supervised classifiers trained on fixed label sets, CLIP learns from natural language supervision, making it adaptable to any visual concept describable in text.

ImageBind: Extending to Six Modalities

Meta’s ImageBind extends cross-modal embeddings beyond vision and language to include:

- Audio (environmental sounds, speech)

- Depth information

- Thermal imaging

- IMU (motion sensor) data

This creates a truly multimodal embedding space where all six modalities are aligned. The key insight is that images serve as a “binding” modality—by pairing images with other modalities and leveraging existing vision-language alignment, ImageBind creates a unified space without requiring all possible modality pairs during training.

Open-Source Alternatives

The ecosystem has expanded with several open-source implementations:

OpenCLIP: A community implementation offering larger models and diverse training recipes:

import open_clip

model, _, preprocess = open_clip.create_model_and_transforms(

'ViT-L-14',

pretrained='laion2b_s32b_b82k'

)

tokenizer = open_clip.get_tokenizer('ViT-L-14')

LAION-5B Models: Trained on the massive open LAION-5B dataset, providing alternatives to proprietary models with comparable or better performance.

For developers interested in state-of-the-art open-source text embedding solutions, the Qwen3 Embedding & Reranker Models on Ollama offer excellent multilingual performance with easy local deployment.

Implementation Strategies

Building a Cross-Modal Search System

A practical implementation of cross-modal embeddings for semantic search involves several components. What are the main applications of cross-modal embeddings? They power use cases from e-commerce product search to content moderation and creative tools.

import numpy as np

from typing import List, Tuple

import faiss

from transformers import CLIPModel, CLIPProcessor

class CrossModalSearchEngine:

def __init__(self, model_name: str = "openai/clip-vit-base-patch32"):

self.model = CLIPModel.from_pretrained(model_name)

self.processor = CLIPProcessor.from_pretrained(model_name)

self.image_index = None

self.image_metadata = []

def encode_images(self, images: List) -> np.ndarray:

"""Encode images into embeddings"""

inputs = self.processor(images=images, return_tensors="pt", padding=True)

with torch.no_grad():

embeddings = self.model.get_image_features(**inputs)

return embeddings.cpu().numpy()

def encode_text(self, texts: List[str]) -> np.ndarray:

"""Encode text queries into embeddings"""

inputs = self.processor(text=texts, return_tensors="pt", padding=True)

with torch.no_grad():

embeddings = self.model.get_text_features(**inputs)

return embeddings.cpu().numpy()

def build_index(self, image_embeddings: np.ndarray):

"""Build FAISS index for efficient similarity search"""

dimension = image_embeddings.shape[1]

# Normalize embeddings for cosine similarity

faiss.normalize_L2(image_embeddings)

# Create index (using HNSW for large-scale deployment)

self.image_index = faiss.IndexHNSWFlat(dimension, 32)

self.image_index.hnsw.efConstruction = 40

self.image_index.add(image_embeddings)

def search(self, query: str, k: int = 10) -> List[Tuple[int, float]]:

"""Search for images using text query"""

query_embedding = self.encode_text([query])

faiss.normalize_L2(query_embedding)

distances, indices = self.image_index.search(query_embedding, k)

return list(zip(indices[0], distances[0]))

# Usage example

engine = CrossModalSearchEngine()

# Build index from image collection

image_embeddings = engine.encode_images(image_collection)

engine.build_index(image_embeddings)

# Search with text

results = engine.search("sunset over mountains", k=5)

Fine-Tuning for Domain-Specific Tasks

While pre-trained models work well for general purposes, domain-specific applications benefit from fine-tuning:

from transformers import CLIPModel, CLIPProcessor, AdamW

import torch.nn as nn

class FineTuneCLIP:

def __init__(self, model_name: str, num_epochs: int = 10):

self.model = CLIPModel.from_pretrained(model_name)

self.processor = CLIPProcessor.from_pretrained(model_name)

self.num_epochs = num_epochs

def contrastive_loss(self, image_embeddings, text_embeddings, temperature=0.07):

"""Compute InfoNCE contrastive loss"""

# Normalize embeddings

image_embeddings = nn.functional.normalize(image_embeddings, dim=1)

text_embeddings = nn.functional.normalize(text_embeddings, dim=1)

# Compute similarity matrix

logits = torch.matmul(image_embeddings, text_embeddings.T) / temperature

# Labels are diagonal (matching pairs)

labels = torch.arange(len(logits), device=logits.device)

# Symmetric loss

loss_i = nn.functional.cross_entropy(logits, labels)

loss_t = nn.functional.cross_entropy(logits.T, labels)

return (loss_i + loss_t) / 2

def train(self, dataloader, learning_rate=5e-6):

"""Fine-tune on domain-specific data"""

optimizer = AdamW(self.model.parameters(), lr=learning_rate)

self.model.train()

for epoch in range(self.num_epochs):

total_loss = 0

for batch in dataloader:

images, texts = batch['images'], batch['texts']

# Process inputs

inputs = self.processor(

text=texts,

images=images,

return_tensors="pt",

padding=True

)

# Forward pass

outputs = self.model(**inputs, return_loss=True)

loss = outputs.loss

# Backward pass

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_loss += loss.item()

avg_loss = total_loss / len(dataloader)

print(f"Epoch {epoch+1}/{self.num_epochs}, Loss: {avg_loss:.4f}")

Production Deployment Considerations

Optimizing Inference Performance

How can I optimize cross-modal embeddings for production? Performance optimization is critical for real-world deployment:

Model Quantization: Reduce model size and increase inference speed:

import torch

from torch.quantization import quantize_dynamic

# Dynamic quantization for CPU inference

quantized_model = quantize_dynamic(

model,

{torch.nn.Linear},

dtype=torch.qint8

)

ONNX Conversion: Export to ONNX for optimized inference:

import torch.onnx

dummy_input = processor(text=["sample"], return_tensors="pt")

torch.onnx.export(

model,

tuple(dummy_input.values()),

"clip_model.onnx",

input_names=['input_ids', 'attention_mask'],

output_names=['output'],

dynamic_axes={

'input_ids': {0: 'batch_size'},

'attention_mask': {0: 'batch_size'}

}

)

Batch Processing: Maximize GPU utilization through batching:

def batch_encode(items: List, batch_size: int = 32):

"""Process items in batches for efficiency"""

embeddings = []

for i in range(0, len(items), batch_size):

batch = items[i:i+batch_size]

batch_embeddings = encode_batch(batch)

embeddings.append(batch_embeddings)

return np.concatenate(embeddings, axis=0)

Scalable Vector Storage

For large-scale applications, vector databases are essential. Which frameworks support cross-modal embedding implementations? Beyond the models themselves, infrastructure matters:

FAISS (Facebook AI Similarity Search): Efficient similarity search library

- Supports billions of vectors

- Multiple index types (flat, IVF, HNSW)

- GPU acceleration available

Milvus: Open-source vector database

from pymilvus import connections, Collection, FieldSchema, CollectionSchema, DataType

# Define schema

fields = [

FieldSchema(name="id", dtype=DataType.INT64, is_primary=True, auto_id=True),

FieldSchema(name="embedding", dtype=DataType.FLOAT_VECTOR, dim=512),

FieldSchema(name="metadata", dtype=DataType.JSON)

]

schema = CollectionSchema(fields, description="Image embeddings")

# Create collection

collection = Collection("images", schema)

# Create index

index_params = {

"metric_type": "IP", # Inner product (cosine similarity)

"index_type": "IVF_FLAT",

"params": {"nlist": 1024}

}

collection.create_index("embedding", index_params)

Pinecone/Weaviate: Managed vector database services offering easy scaling and maintenance.

Advanced Use Cases and Applications

Zero-Shot Classification

Cross-modal embeddings enable classification without task-specific training. This capability extends beyond traditional computer vision approaches—for instance, if you’re interested in more specialized object detection with TensorFlow, that represents a complementary supervised learning approach for specific detection tasks.

def zero_shot_classify(image, candidate_labels: List[str], model, processor):

"""Classify image into arbitrary categories"""

# Create text prompts

text_inputs = [f"a photo of a {label}" for label in candidate_labels]

# Get embeddings

inputs = processor(

text=text_inputs,

images=image,

return_tensors="pt",

padding=True

)

outputs = model(**inputs)

# Compute probabilities

logits = outputs.logits_per_image

probs = logits.softmax(dim=1)

# Return ranked predictions

sorted_indices = probs.argsort(descending=True)[0]

return [(candidate_labels[idx], probs[0][idx].item()) for idx in sorted_indices]

# Example usage

labels = ["cat", "dog", "bird", "fish", "horse"]

predictions = zero_shot_classify(image, labels, model, processor)

print(f"Top prediction: {predictions[0]}")

Multimodal RAG (Retrieval-Augmented Generation)

Combining cross-modal embeddings with language models creates powerful multimodal RAG systems. Once you’ve retrieved relevant documents, reranking with embedding models can significantly improve the quality of results by reordering retrieved candidates based on relevance:

class MultimodalRAG:

def __init__(self, clip_model, llm_model):

self.clip = clip_model

self.llm = llm_model

self.knowledge_base = []

def add_documents(self, images, texts, metadata):

"""Add multimodal documents to knowledge base"""

image_embeds = self.clip.encode_images(images)

text_embeds = self.clip.encode_text(texts)

# Store combined information

for i, (img_emb, txt_emb, meta) in enumerate(

zip(image_embeds, text_embeds, metadata)

):

self.knowledge_base.append({

'image_embedding': img_emb,

'text_embedding': txt_emb,

'metadata': meta,

'index': i

})

def retrieve(self, query: str, k: int = 5):

"""Retrieve relevant multimodal content"""

query_embedding = self.clip.encode_text([query])[0]

# Compute similarities

similarities = []

for doc in self.knowledge_base:

# Average similarity across modalities

img_sim = np.dot(query_embedding, doc['image_embedding'])

txt_sim = np.dot(query_embedding, doc['text_embedding'])

combined_sim = (img_sim + txt_sim) / 2

similarities.append((combined_sim, doc))

# Return top-k

similarities.sort(reverse=True)

return [doc for _, doc in similarities[:k]]

def answer_query(self, query: str):

"""Answer query using retrieved multimodal context"""

retrieved_docs = self.retrieve(query)

# Construct context from retrieved documents

context = "\n".join([doc['metadata']['text'] for doc in retrieved_docs])

# Generate answer with LLM

prompt = f"Context:\n{context}\n\nQuestion: {query}\n\nAnswer:"

answer = self.llm.generate(prompt)

return answer, retrieved_docs

If you’re implementing production RAG systems in Go, you might find this guide on reranking text documents with Ollama and Qwen3 Embedding model in Go particularly useful for optimizing retrieval quality.

Content Moderation and Safety

Cross-modal embeddings excel at detecting inappropriate content across modalities:

class ContentModerator:

def __init__(self, model, processor):

self.model = model

self.processor = processor

# Define safety categories

self.unsafe_categories = [

"violent content",

"adult content",

"hateful imagery",

"graphic violence",

"explicit material"

]

self.safe_categories = [

"safe for work",

"family friendly",

"educational content"

]

def moderate_image(self, image, threshold: float = 0.3):

"""Check if image contains unsafe content"""

# Combine all categories

all_categories = self.unsafe_categories + self.safe_categories

text_inputs = [f"image containing {cat}" for cat in all_categories]

# Get predictions

inputs = self.processor(

text=text_inputs,

images=image,

return_tensors="pt"

)

outputs = self.model(**inputs)

probs = outputs.logits_per_image.softmax(dim=1)[0]

# Check unsafe categories

unsafe_scores = probs[:len(self.unsafe_categories)]

max_unsafe_score = unsafe_scores.max().item()

return {

'is_safe': max_unsafe_score < threshold,

'confidence': 1 - max_unsafe_score,

'flagged_categories': [

self.unsafe_categories[i]

for i, score in enumerate(unsafe_scores)

if score > threshold

]

}

Best Practices and Common Pitfalls

Data Preprocessing

Proper preprocessing is crucial for optimal performance:

from torchvision import transforms

# Standard CLIP preprocessing

clip_transform = transforms.Compose([

transforms.Resize(224, interpolation=transforms.InterpolationMode.BICUBIC),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.48145466, 0.4578275, 0.40821073],

std=[0.26862954, 0.26130258, 0.27577711]

)

])

Handling Bias and Fairness

Cross-modal models can inherit biases from training data:

Mitigation strategies:

- Evaluate on diverse demographic groups

- Use debiasing techniques during fine-tuning

- Implement fairness-aware retrieval

- Regular auditing and monitoring in production

Embedding Quality Assessment

Monitor embedding quality in production:

def assess_embedding_quality(embeddings: np.ndarray):

"""Compute metrics for embedding quality"""

# Compute average pairwise distance

distances = np.linalg.norm(

embeddings[:, None] - embeddings[None, :],

axis=2

)

avg_distance = distances.mean()

# Check for clustering (low intra-cluster distance)

from sklearn.cluster import KMeans

kmeans = KMeans(n_clusters=10)

labels = kmeans.fit_predict(embeddings)

# Compute silhouette score

from sklearn.metrics import silhouette_score

score = silhouette_score(embeddings, labels)

return {

'avg_pairwise_distance': avg_distance,

'silhouette_score': score

}

Docker Deployment Example

Package your cross-modal application for easy deployment:

FROM nvidia/cuda:11.8.0-cudnn8-runtime-ubuntu22.04

# Install Python and dependencies

RUN apt-get update && apt-get install -y python3-pip

WORKDIR /app

# Install requirements

COPY requirements.txt .

RUN pip3 install --no-cache-dir -r requirements.txt

# Copy application code

COPY . .

# Expose API port

EXPOSE 8000

# Run API server

CMD ["uvicorn", "api:app", "--host", "0.0.0.0", "--port", "8000"]

# api.py - FastAPI server for cross-modal embeddings

from fastapi import FastAPI, File, UploadFile

from pydantic import BaseModel

import torch

from PIL import Image

import io

app = FastAPI()

# Load model at startup

@app.on_event("startup")

async def load_model():

global model, processor

model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")

processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

if torch.cuda.is_available():

model = model.cuda()

class TextQuery(BaseModel):

text: str

@app.post("/embed/text")

async def embed_text(query: TextQuery):

inputs = processor(text=[query.text], return_tensors="pt", padding=True)

if torch.cuda.is_available():

inputs = {k: v.cuda() for k, v in inputs.items()}

with torch.no_grad():

embeddings = model.get_text_features(**inputs)

return {"embedding": embeddings.cpu().numpy().tolist()}

@app.post("/embed/image")

async def embed_image(file: UploadFile = File(...)):

image = Image.open(io.BytesIO(await file.read()))

inputs = processor(images=image, return_tensors="pt")

if torch.cuda.is_available():

inputs = {k: v.cuda() for k, v in inputs.items()}

with torch.no_grad():

embeddings = model.get_image_features(**inputs)

return {"embedding": embeddings.cpu().numpy().tolist()}

@app.post("/similarity")

async def compute_similarity(

text: TextQuery,

file: UploadFile = File(...)

):

# Get both embeddings

image = Image.open(io.BytesIO(await file.read()))

inputs = processor(text=[text.text], images=image, return_tensors="pt", padding=True)

if torch.cuda.is_available():

inputs = {k: v.cuda() for k, v in inputs.items()}

outputs = model(**inputs)

similarity = outputs.logits_per_image[0][0].item()

return {"similarity": similarity}

Future Directions

The field of cross-modal embeddings continues to evolve rapidly:

Larger Modality Coverage: Future models will likely incorporate additional modalities like touch (haptic feedback), smell, and taste data for truly comprehensive multimodal understanding.

Improved Efficiency: Research into distillation and efficient architectures will make powerful cross-modal models accessible on edge devices.

Better Alignment: Advanced techniques for aligning modalities more precisely, including cycle consistency losses and adversarial training.

Compositional Understanding: Moving beyond simple object recognition to understanding complex relationships and compositions across modalities.

Temporal Modeling: Better handling of video and time-series data with explicit temporal reasoning in embedding spaces.

Useful Links

- OpenAI CLIP Repository

- OpenCLIP: Open Source Implementation

- Hugging Face Transformers Documentation

- Meta ImageBind

- LAION-5B Dataset

- FAISS Documentation

- Milvus Vector Database

- Pinecone Vector Database

- Paper: Learning Transferable Visual Models From Natural Language Supervision

- Paper: ImageBind: One Embedding Space To Bind Them All

- Concrete Reinforcement Bar Caps object detection with tensorflow

- Reranking with embedding models

- Reranking text documents with Ollama and Qwen3 Embedding model - in Go

- Qwen3 Embedding & Reranker Models on Ollama: State-of-the-Art Performance

Cross-modal embeddings represent a paradigm shift in how AI systems process and understand information. By breaking down the barriers between different data types, these techniques enable more natural and capable AI applications. Whether you’re building search systems, content moderation tools, or creative applications, mastering cross-modal embeddings opens up a world of possibilities for innovation in multimodal AI.