Chat UIs for Local Ollama Instances

A quick overview of the most prominent UIs for Ollama in 2025

A locally hosted Ollama lets you run large language models on your own machine, but using it from the command line isn’t user-friendly. Several open-source projects provide ChatGPT-style interfaces that connect to a local Ollama instance.
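Under the hood, each of these UIs talks to Ollama over its local HTTP API (by default at http://localhost:11434). The minimal standard-library Python sketch below shows the kind of request involved: a POST to Ollama's native `/api/chat` endpoint, whose streamed reply arrives as newline-delimited JSON. It assumes a running Ollama server and an already-pulled model; the helper names are illustrative and not taken from any of the projects discussed here.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_chat_request(model, messages, stream=True):
    """Build the JSON body for Ollama's native /api/chat endpoint."""
    return {"model": model, "messages": messages, "stream": stream}


def collect_stream(lines):
    """Join the message fragments from a streamed /api/chat response.

    With stream=True, Ollama returns one JSON object per line, each carrying
    a piece of the assistant reply; the last one has "done": true.
    """
    parts = []
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def chat(model, prompt, base_url=OLLAMA_URL):
    """Send one user message and return the full reply (needs a running Ollama)."""
    body = build_chat_request(model, [{"role": "user", "content": prompt}])
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # Iterating the response yields one line (bytes) per streamed chunk.
        return collect_stream(line.decode("utf-8") for line in resp)
```

For example, `chat("llama3.2", "Why is the sky blue?")` would return the full reply, assuming a model with that name has been pulled. Streaming is what lets chat UIs render tokens as they arrive; `collect_stream` simply concatenates them.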

These UIs support conversational chat, often with features like document upload for retrieval-augmented generation (RAG), and run as web or desktop applications. Below is a comparison of key options, followed by detailed sections on each. For a broader view of how local Ollama fits with vLLM, Docker Model Runner, LocalAI and cloud providers—including cost and infrastructure trade-offs—see LLM Hosting: Local, Self-Hosted & Cloud Infrastructure Compared.

Comparison of Ollama-Compatible UIs

| UI Tool | Platform | Document Support | Ollama Integration | Strengths | Limitations |
|---|---|---|---|---|---|
| Page Assist | Browser extension (Chrome, Firefox) | Yes – add files for analysis | Connects to local Ollama via extension config | In-browser chat; easy model management and web page context integration. | Browser-only; requires installing/configuring an extension. |
| Open WebUI | Web app (self-hosted; Docker/PWA) | Yes – built-in RAG (upload docs or add to library) | Direct Ollama API support or bundled server (configure base URL) | Feature-rich (multi-LLM, offline, image generation); mobile-friendly (PWA). | Setup is heavier (Docker/K8s); broad scope can be overkill for simple use. |
| LobeChat | Web app (self-hosted; PWA support) | Yes – “Knowledge Base” with file upload (PDF, images, etc.) | Supports Ollama as one of multiple AI backends (requires enabling Ollama’s API access) | Sleek ChatGPT-like UI; voice chat, plugins, and multi-model support. | Complex feature set; requires environment setup (e.g. cross-origin for Ollama). |
| LibreChat | Web app (self-hosted; multi-user) | Yes – “Chat with Files” using RAG (via embeddings) | Compatible with Ollama and many other providers (switchable per chat) | Familiar ChatGPT-style interface; rich features (agents, code interpreter, etc.). | Installation/configuration can be involved; large project may be more than needed for basic use. |
| AnythingLLM | Desktop app (Windows, Mac, Linux) or web (Docker) | Yes – built-in RAG: drag-and-drop documents (PDF, DOCX, etc.) with citations | Ollama supported as an LLM provider (set in config or Docker env) | All-in-one UI (private ChatGPT with your docs); no-code agent builder, multi-user support. | Higher resource usage (embeddings DB, etc.); desktop app lacks some multi-user features. |
| Chat-with-Notes | Web app (lightweight Flask server) | Yes – upload text/PDF files and chat with their content | Uses Ollama for all AI answers (requires Ollama running locally) | Very simple setup and interface focused on document Q&A; data stays local. | Basic UI and functionality; single-user, one document at a time (no advanced features). |

Each of these tools is actively maintained and open-source. Next, we look at each option in detail, including how it works with Ollama, its notable features, and its trade-offs.

Page Assist (Browser Extension)

Page Assist is an open-source browser extension that brings local LLM chat to your browser. It supports Chromium-based browsers and Firefox, offering a ChatGPT-like sidebar or tab where you can converse with a model. Through its settings, Page Assist can connect to a locally running Ollama instance (or other local backends) as its AI provider. Notably, it lets you add files (e.g. PDFs or text) for the AI to analyze within the chat, enabling basic RAG workflows. You can even have it help with the content of the current webpage or run web searches for information.

Setup is straightforward: install the extension from the Chrome Web Store or Firefox Add-ons, ensure Ollama is running, and select Ollama as the local AI provider in Page Assist’s settings. The interface includes features like chat history, model selection, and an optional shareable URL for your chat results. A web UI is also available via a keyboard shortcut if you prefer a full-tab chat experience.
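Before selecting Ollama in Page Assist, it can be useful to confirm that the server is reachable and to see which models it will offer. Ollama's `/api/tags` endpoint lists the locally pulled models, and the sketch below queries it. This is an independent check, not a description of how Page Assist itself populates its model list.

```python
import json
import urllib.request


def parse_model_names(tags_json):
    """Extract sorted model names from an Ollama /api/tags response body."""
    data = json.loads(tags_json)
    return sorted(m["name"] for m in data.get("models", []))


def list_local_models(base_url="http://localhost:11434"):
    """Return the names of models pulled into a running Ollama instance."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

If `list_local_models()` raises a connection error, Ollama is not running (or not on the default port), and Page Assist will not find it either.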

Strengths: Page Assist is lightweight and convenient – since it lives in the browser, there’s no separate server to run. It’s great for browsing contexts (you can open it on any webpage) and supports internet search integration and file attachments to enrich the conversation. It also provides handy features like keyboard shortcuts for new chat and toggling the sidebar.

Limitations: Being an extension, it is restricted to a browser environment. The UI is simpler and somewhat less feature-rich than full standalone chat apps. For example, multi-user management or advanced agent plugins are not in scope. Also, initial setup may require building/loading the extension if a pre-packaged version isn’t available for your browser (the project provides build instructions using Bun or npm). Overall, Page Assist is best for individual use when you want quick access to Ollama-powered chat while web browsing, with moderate RAG capabilities.

Open WebUI (Self-Hosted Web Application)

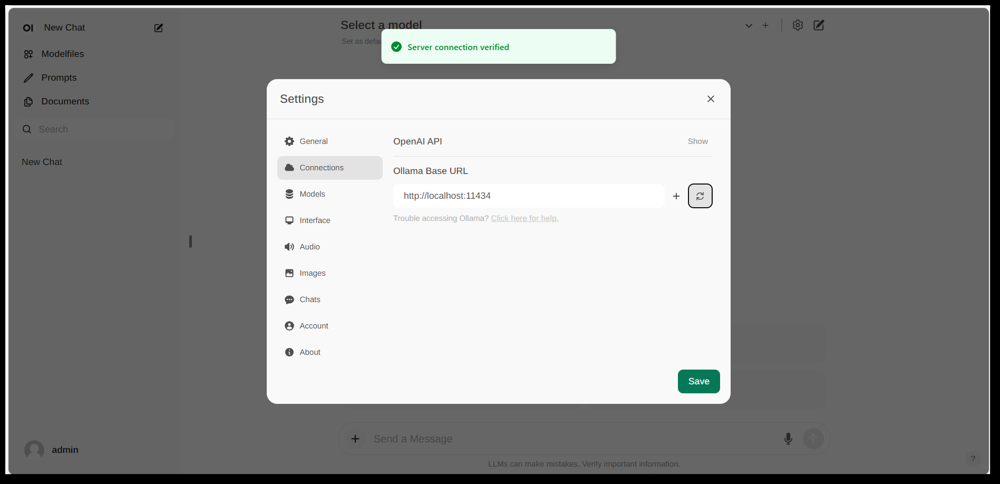

Open WebUI’s settings interface, showing an Ollama connection configured (base URL set to the local Ollama API). The UI includes a Documents section, enabling RAG workflows.

Open WebUI is a powerful, general-purpose chat front-end originally created to work with Ollama, and now expanded to support various LLM backends. It runs as a self-hosted web application and is typically deployed via Docker or Kubernetes for ease of setup. Once running, you access it through your browser (with support for installing it as a Progressive Web App on mobile devices).

Open WebUI offers a full chat interface with multi-user support, model management, and extensive features. Most importantly for document work, it has built-in RAG capabilities: you can upload or import documents into a document library and then ask questions answered with retrieval augmentation. The interface allows loading documents directly into a chat session or maintaining a persistent library of knowledge. It even supports running web searches and injecting the results into the conversation for up-to-date information.

Ollama integration: Open WebUI connects to Ollama through its API. You can either run the Open WebUI Docker container alongside an Ollama server and set an environment variable pointing to Ollama’s URL, or use a special Docker image that bundles Ollama with the web UI. In practice, after launching the containers, you visit Open WebUI in your browser; if the connection is configured correctly, it reports “Server connection verified” (as shown in the image above). This means the UI is ready to use your local Ollama models for chat. Open WebUI also supports OpenAI API-compatible endpoints, so it can interface with LM Studio, OpenRouter, etc., in addition to Ollama.
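For the Docker route, Open WebUI reads the Ollama address from the `OLLAMA_BASE_URL` environment variable (for example, `-e OLLAMA_BASE_URL=http://host.docker.internal:11434` when Ollama runs on the Docker host). The Python sketch below mirrors that lookup and performs a simple reachability check against Ollama's `/api/version` endpoint. It is a rough troubleshooting aid for a connection that will not verify; we do not claim it is the exact request Open WebUI makes.

```python
import json
import os
import urllib.error
import urllib.request


def resolve_base_url(env=None):
    """Return the Ollama URL from OLLAMA_BASE_URL, falling back to the default."""
    env = os.environ if env is None else env
    return env.get("OLLAMA_BASE_URL", "http://localhost:11434").rstrip("/")


def check_ollama(base_url, timeout=3.0):
    """Return Ollama's version string, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.loads(resp.read().decode("utf-8")).get("version")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, DNS failure, timeout, or a non-JSON reply.
        return None
```

Running `print(check_ollama(resolve_base_url()))` from the same network context as the container (for instance, inside it) shows whether the URL you configured actually reaches Ollama; a `None` result usually points to a wrong host or port.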

Strengths: This solution is one of the most feature-rich and flexible UIs. It supports multiple simultaneous models and conversation threads, custom “characters” or system prompts, image generation, and more. The RAG implementation is robust – you get a full UI for managing documents and even configuring which vector store or search service to use for retrieval. Open WebUI is also actively developed (with an extensive community, as indicated by its high star count on GitHub) and designed for extensibility and scaling. It’s a good choice if you want a comprehensive, all-in-one chat UI for local models, especially in a scenario with multiple users or complex use-cases.

Limitations: With great power comes greater complexity. Open WebUI can be overkill for simple personal use: deploying Docker containers and managing the configuration might be intimidating if you’re not familiar with those tools. It consumes more resources than a lightweight app, since it runs a web server, an optional database for chat history, and so on. Also, features like role-based access control and user management, while useful, show that it is geared toward a server setup; a single user at a home PC might not need all that. In short, the setup is heavier and the interface may feel complex if you just need a basic ChatGPT clone. But for those who do need its breadth of features (or want to easily swap between Ollama and other model providers in one UI), Open WebUI is a top contender.

LobeChat (ChatGPT-Like Framework with Plugins)

LobeChat interface banner showing “Ollama Supported” and multiple local models. LobeChat lets you deploy a slick ChatGPT-style web app using Ollama or other providers, with features like voice input and plugins.

LobeChat is an open-source chat framework that emphasizes a polished user experience and flexibility. It’s essentially a ChatGPT-like web application you can self-host, with support for multiple AI providers – from OpenAI and Anthropic to open models via Ollama. LobeChat is designed with privacy in mind (you run it yourself) and has a modern interface that includes conveniences like conversation memory, voice conversation mode, and even text-to-image generation through plugins.

One of LobeChat’s key features is its Knowledge Base capability. You can upload documents (in formats such as PDFs, images, audio, video) and create a knowledge base that can be drawn upon during chat. This means you can ask questions about the content of your files – a RAG workflow that LobeChat supports out-of-the-box. The UI provides management of these files/knowledge bases and allows toggling their use in the conversation, giving a richer Q&A experience beyond the base LLM.

To use LobeChat with Ollama, you deploy the LobeChat app (for example, via a provided Docker image or script) and configure Ollama as the backend. LobeChat recognizes Ollama as a first-class provider; it even offers a one-click deployment script via the Pinokio AI browser if you use that. In practice, you may need to adjust Ollama’s settings so that LobeChat’s web frontend can access the Ollama HTTP API, most notably enabling CORS by allowing LobeChat’s origin in Ollama’s OLLAMA_ORIGINS environment variable, as LobeChat’s docs describe. Once configured, you can choose an Ollama-hosted model in the LobeChat UI and converse with it, including querying your uploaded documents.
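Ollama decides which browser origins may call its API from the `OLLAMA_ORIGINS` environment variable (a comma-separated list), and where it listens from `OLLAMA_HOST`. A minimal Python sketch for launching `ollama serve` with both set is below. The origin `http://localhost:3210` in the usage note is an assumption (a typical LobeChat Docker port); substitute whatever address your LobeChat instance is actually served from.

```python
import os
import subprocess


def ollama_serve_env(allowed_origins, host="127.0.0.1:11434", base=None):
    """Return an environment for `ollama serve` that permits browser requests
    from the given origins (Ollama reads OLLAMA_ORIGINS and OLLAMA_HOST)."""
    env = dict(os.environ if base is None else base)
    env["OLLAMA_ORIGINS"] = ",".join(allowed_origins)  # comma-separated list
    env["OLLAMA_HOST"] = host
    return env


def start_ollama(allowed_origins):
    """Start Ollama in the background with CORS opened for the given origins."""
    return subprocess.Popen(["ollama", "serve"], env=ollama_serve_env(allowed_origins))
```

Usage: `proc = start_ollama(["http://localhost:3210"])`. If Ollama already runs as a system service or desktop app, this would clash with it on the same port; in that case the variables have to be set for the existing service instead, and Ollama restarted.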

Strengths: LobeChat’s UI is often praised for being clean and user-friendly, closely mimicking ChatGPT’s look and feel (which can ease adoption). It adds value with extras like voice input/output for spoken conversations and a plugin system to extend functionality (similar to ChatGPT’s plugins, enabling things like web browsing or image generation). Multi-model support means you can easily switch between, say, an Ollama local model and an OpenAI API model in the same interface. It also supports installation as a mobile-friendly PWA, so you can access your local chat on the go.

Limitations: Setting up LobeChat can be more complex than some alternatives. It’s a full-stack application (often run with Docker Compose), so there’s some overhead. In particular, configuring Ollama integration requires enabling cross-origin requests on Ollama’s side and ensuring ports align – a one-time task, but technical. Additionally, while LobeChat is quite powerful, not all features may be needed for every user; for example, if you don’t need multi-provider support or plugins, the interface might feel cluttered compared to a minimal tool. Lastly, certain advanced features like the one-click deploy assume specific environments (Pinokio browser or Vercel), which you may or may not use. Overall, LobeChat is ideal if you want a full-featured ChatGPT alternative that runs locally with Ollama, and you don’t mind a bit of initial configuration to get there.

LibreChat (ChatGPT Clone with Multi-Provider Support)

LibreChat (formerly known as ChatGPT-Clone) is an open-source project that aims to replicate and extend the ChatGPT interface and functionality. It can be deployed locally (or on your own server) and supports a variety of AI backends, including open-source models via Ollama. Essentially, LibreChat provides the familiar chat experience (a dialogue interface with history and user and assistant messages) while letting you plug in different model providers on the backend.

LibreChat supports document interaction and RAG through a companion service, the RAG API, combined with an embeddings provider. In the interface, you can use features like “Chat with Files”, which lets you upload documents and then ask questions about them. Under the hood, this uses embeddings and a vector store to fetch relevant context from your files. This means you can achieve an effect similar to ChatGPT with custom knowledge, all locally. The project even provides a separate repo for the RAG service if you want to self-host it.
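The retrieval step behind features like this can be illustrated in a few lines: chunks of your files are embedded as vectors, and the chunks whose vectors are most similar to the question's vector are passed to the model as context. The sketch below uses cosine similarity over a plain Python list standing in for the vector store, with toy 2-dimensional vectors in place of real embeddings. It illustrates the general technique only, not LibreChat's RAG API code.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k=2):
    """Return the k (score, chunk_text) pairs most similar to the query.

    `index` is a list of (chunk_text, vector) pairs standing in for a vector store.
    """
    scored = [(cosine(query_vec, vec), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

A real vector store replaces the linear scan with an approximate nearest-neighbour index, but the ranking idea is the same.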

Using LibreChat with Ollama typically involves running the LibreChat server (for example via Node or Docker) and ensuring it can reach the Ollama service. LibreChat has a “Custom Endpoint” setting where you can enter an OpenAI-compatible API URL. Since Ollama exposes an OpenAI-compatible API under the /v1 path, LibreChat can be pointed to http://localhost:11434/v1 (or wherever Ollama is listening; if LibreChat runs in Docker, localhost refers to the container itself, so use an address such as http://host.docker.internal:11434/v1 instead). In fact, LibreChat explicitly lists Ollama among its supported AI providers, alongside others like OpenAI and Cohere. Once configured, you can select the Ollama model in a dropdown and chat away. LibreChat also allows switching models or providers even mid-conversation and supports multiple chat presets/contexts.
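Because Ollama's OpenAI-compatible API lives under `/v1`, any client that speaks the OpenAI chat completions format can use it. The standard-library sketch below shows the request and response shapes such a client (a LibreChat custom endpoint included) exchanges. The model name is whatever you have pulled locally, and the API key is a placeholder, since Ollama accepts but does not check it.

```python
import json
import urllib.request


def openai_chat_body(model, user_text, system_text=None):
    """Build an OpenAI-style /chat/completions request body."""
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages}


def extract_reply(response_json):
    """Pull the assistant text out of an OpenAI-style completion response."""
    return json.loads(response_json)["choices"][0]["message"]["content"]


def ask(model, user_text, base_url="http://localhost:11434/v1"):
    """POST to an OpenAI-compatible endpoint (here, Ollama's) and return the reply."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(openai_chat_body(model, user_text)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Placeholder key: Ollama ignores it, but OpenAI-style clients send one.
            "Authorization": "Bearer ollama",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return extract_reply(resp.read().decode("utf-8"))
```

For instance, `ask("llama3.2", "Hello")` assumes a model by that name has been pulled. If this call works from the machine (or container) running LibreChat, the same base URL should work in its custom endpoint configuration.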

Strengths: The primary advantage of LibreChat is the rich set of features built around the chat experience. It includes things like conversation branching, message search, built-in Code Interpreter support (safe sandboxed code execution), and tool/agent integrations. It’s essentially ChatGPT++, with the ability to integrate local models. For someone who likes ChatGPT’s UI, LibreChat will feel very familiar and has almost no learning curve. The project is active and community-driven (as evidenced by its frequent updates and discussions), and it’s quite flexible: you can connect to many kinds of LLM endpoints or even run it multi-user with authentication for a team setting.

Limitations: With its many features, LibreChat can be heavier to run. The installation might involve setting up a database for storing chats and configuring environment variables for various APIs. If you enable all components (RAG, agents, image generation, etc.), it’s a fairly complex stack. For a single user who only needs a basic chat with one local model, LibreChat could be more than needed. Additionally, the UI, while familiar, is not highly specialized for document QA – it does the job but lacks a dedicated “document library” interface (uploads are typically done within a chat or via API). In short, LibreChat shines when you want a full ChatGPT-like environment with a range of features running locally, but simpler solutions might suffice for narrowly focused use-cases.

AnythingLLM (All-in-One Desktop or Server App)

AnythingLLM is an all-in-one AI application that emphasizes RAG and ease of use. It allows you to “chat with your docs” using either open-source LLMs or even OpenAI’s models, all through a single unified interface. Notably, AnythingLLM is available both as a cross-platform desktop app (for Windows, Mac, Linux) and as a self-hosted web server (via Docker). This flexibility means you can run it like a normal application on your PC, or deploy it for multiple users on a server.

Document handling is at the core of AnythingLLM. You can drag-and-drop documents (PDF, TXT, DOCX, etc.) into the app, and it will automatically index them into a vector database (it comes with LanceDB by default). In the chat interface, when you ask questions, it will retrieve relevant chunks from your documents and provide cited answers, so you know which file and section the information came from. Essentially, it builds a private knowledge base for you and lets the LLM use it as context. You can organize documents into “workspaces” (for example, one workspace per project or topic), isolating contexts as needed.
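Citations work because each retrieved chunk carries metadata about where it came from. The hypothetical sketch below shows the prompt-assembly side: retrieved chunks are numbered and tagged with their source, so the model can refer to [1], [2], and so on, and the UI can map those markers back to files. The template wording and the `source`/`text` field names are assumptions for illustration, not AnythingLLM's internal format.

```python
def build_cited_prompt(question, chunks):
    """Number each retrieved chunk and tag it with its source.

    `chunks` is a list of dicts with hypothetical "source" and "text" keys.
    """
    lines = ["Answer using only the numbered sources below and cite them as [n].", ""]
    for i, chunk in enumerate(chunks, start=1):
        lines.append(f"[{i}] ({chunk['source']}) {chunk['text']}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


def citation_map(chunks):
    """Map each citation marker back to the source it refers to."""
    return {f"[{i}]": chunk["source"] for i, chunk in enumerate(chunks, start=1)}
```

Keeping the marker-to-source map outside the prompt lets the UI render clickable citations without trusting the model to reproduce file names exactly.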

Using Ollama with AnythingLLM is straightforward. In the configuration, you choose Ollama as the LLM provider. If running via Docker, you set environment variables like LLM_PROVIDER=ollama and provide the OLLAMA_BASE_PATH (the URL to your Ollama instance). The AnythingLLM server will then send all model queries to Ollama’s API. Ollama is officially supported, and the docs note you can leverage it to run various open models (like Llama 2, Mistral, etc.) locally. In fact, the developers highlight that combining AnythingLLM with Ollama unlocks powerful offline RAG capabilities: Ollama handles the model inference, and AnythingLLM handles embeddings and the UI/agent logic.
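For a Docker deployment, the two settings named above can be kept in an env file. The small Python sketch below renders one. The `extra` argument is for any additional AnythingLLM variables you choose to set; none are assumed here, and names beyond `LLM_PROVIDER` and `OLLAMA_BASE_PATH` should be checked against AnythingLLM's own example env file.

```python
from pathlib import Path


def anythingllm_env(ollama_url, extra=None):
    """Render KEY=value lines selecting Ollama as AnythingLLM's LLM provider."""
    settings = {"LLM_PROVIDER": "ollama", "OLLAMA_BASE_PATH": ollama_url}
    settings.update(extra or {})  # any additional variables you choose to set
    return "".join(f"{key}={value}\n" for key, value in settings.items())


def write_env(path, ollama_url, extra=None):
    """Write the rendered settings to an env file (e.g. one passed to Docker)."""
    Path(path).write_text(anythingllm_env(ollama_url, extra), encoding="utf-8")
```

Usage: `write_env(".env", "http://host.docker.internal:11434")`, which assumes Ollama runs on the Docker host. Values are left unquoted because Docker's `--env-file` would otherwise keep the quotes as part of the value.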

Strengths: AnythingLLM provides a comprehensive solution for private Q&A and chat. Key strengths include: an easy setup for RAG (the heavy lifting of embedding and storing vectors is automated), multi-document support with clear citation of sources, and additional features like AI Agents (it has a no-code agent builder where you can create custom workflows and tool usage). It also supports multiple users in the server (Docker) deployment, with user accounts and permissions if needed. The interface is designed to be simple (chat box plus a sidebar of docs/workspaces) but powerful under the hood. For personal use, the desktop app is a big plus: you get a native-feeling app without needing to open a browser or run commands, and it stores data locally by default.

Limitations: Because it integrates many components (LLM API, embedding models, vector DB, etc.), AnythingLLM can be resource-intensive. When you ingest documents, generating embeddings may take time and memory (it even supports using Ollama itself for embeddings, with models like nomic-embed-text). The desktop app simplifies usage, but if you have a lot of documents or very large files, expect some heavy processing in the background. Another limitation is that advanced users might find it less configurable than assembling their own stack: it works with a fixed set of supported vector stores (LanceDB by default, with alternatives such as Chroma), so using a store outside that set would mean diving into config or code. Also, while multiple providers are supported, the interface is really geared toward one model at a time (you switch the global provider setting if you want to use a different model). In summary, AnythingLLM is an excellent out-of-the-box solution for local document chat, especially with Ollama, but it is a bigger application to run than minimal UIs.
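To have Ollama produce the embeddings as well, a client sends text to Ollama's embedding endpoint. The sketch below uses `/api/embed`, which accepts a batch under `input` and returns one vector per item under `embeddings` (older Ollama releases expose `/api/embeddings` with a single `prompt` instead). It assumes the `nomic-embed-text` model has been pulled; how AnythingLLM batches its own requests is not shown here.

```python
import json
import urllib.request


def embed_body(texts, model="nomic-embed-text"):
    """Request body for Ollama's /api/embed endpoint (a batch of inputs)."""
    return {"model": model, "input": list(texts)}


def parse_embeddings(response_json):
    """/api/embed returns {"embeddings": [[...], ...]}, one vector per input."""
    return json.loads(response_json)["embeddings"]


def embed(texts, base_url="http://localhost:11434", model="nomic-embed-text"):
    """Embed a batch of texts with a running Ollama instance."""
    req = urllib.request.Request(
        f"{base_url}/api/embed",
        data=json.dumps(embed_body(texts, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return parse_embeddings(resp.read().decode("utf-8"))
```

Embedding a large document collection means many such calls, which is where the ingestion time and memory use mentioned above come from.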

Chat-with-Notes (Minimal Document Chat UI)

Chat-with-Notes is a minimalist application specifically built to chat with local text files using Ollama-managed models. It’s essentially a lightweight Flask web server that you run on your PC, providing a simple web page where you can upload a document and start a chat about it. The goal of this project is simplicity: it doesn’t have a lot of bells and whistles, but it does the core job of document-question answering with a local LLM.

Using Chat-with-Notes involves first making sure your Ollama instance is running a model (for example, you might start Ollama with ollama run llama2 or another model). Then you launch the Flask app (python app.py) and open the local website. The UI will prompt you to upload a file (supported formats include plain text, Markdown, code files, HTML, JSON, and PDFs). Once uploaded, the text content of the file is displayed, and you can ask questions or chat with the AI about that content. The conversation happens in a typical chat bubble format. If you upload a new file mid-conversation, the app will ask if you want to start a fresh chat or keep the current chat context and just add the new file’s info. This way, you can talk about multiple files sequentially if needed. There are also buttons to clear the chat or export the conversation to a text file.

Under the hood, Chat-with-Notes queries the Ollama API for generating responses. Ollama handles the model inference, and Chat-with-Notes just supplies the prompt (which includes relevant parts of the uploaded text). It does not use a vector database; instead, it simply sends the entire file content (or chunks of it) along with your question to the model. This approach works best for reasonably sized documents that fit in the model’s context window.
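The context-stuffing approach can be sketched as follows: if the document plausibly fits in the model's context window, send it whole; otherwise fall back to a leading chunk. This is an illustration under stated assumptions, not Chat-with-Notes' actual code. Token counts are approximated at about four characters per token (a rough heuristic, not a tokenizer), and the 4096-token default stands in for whatever you would pass as Ollama's `num_ctx` option.

```python
def chunk_text(text, max_chars=4000, overlap=200):
    """Split text into windows of at most max_chars, overlapping by `overlap`."""
    if max_chars <= overlap:
        raise ValueError("max_chars must be larger than overlap")
    chunks, start = [], 0
    while start < len(text):
        end = start + max_chars
        chunks.append(text[start:end])
        if end >= len(text):
            break
        start = end - overlap  # step back so neighbouring chunks share context
    return chunks


def fits_context(text, num_ctx=4096, reserve=512):
    """Rough fit check: ~4 characters per token, keeping `reserve` tokens for the answer."""
    return len(text) / 4 <= num_ctx - reserve


def build_messages(document, question, num_ctx=4096, reserve=512):
    """Build /api/chat messages that place the document in the system prompt."""
    if fits_context(document, num_ctx, reserve):
        context = document
    else:
        # Crude fallback: keep only the first chunk that fits the budget.
        context = chunk_text(document, max_chars=(num_ctx - reserve) * 4)[0]
    return [
        {"role": "system",
         "content": "Answer questions about this document:\n\n" + context},
        {"role": "user", "content": question},
    ]
```

The returned list can be sent as the `messages` field of an `/api/chat` request. The fallback silently discards everything past the first chunk, which is why very large documents need manual handling with this kind of design.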

Strengths: The app is extremely simple to deploy and use. There’s no complex configuration – if you have Python and Ollama set up, you can get it running in a minute or two. The interface is clean and minimal, focusing on the text content and the Q&A. Because it’s so focused, it ensures that all data stays local and only in-memory (no external calls except to Ollama on localhost). It’s a great choice if you specifically want to chat with files and don’t need general conversation without a document.

Limitations: The minimalism of Chat-with-Notes means it lacks many features found in other UIs. For example, it doesn’t support using multiple models or providers (it’s Ollama-only by design), and it doesn’t maintain a long-term library of documents – you upload files as needed per session, and there’s no persistent vector index. Scaling to very large documents might be tricky without manual adjustments, since it may try to include a lot of text in the prompt. Also, the UI, while functional, is not as polished (no dark mode, no rich text formatting of responses, etc.). In essence, this tool is ideal for quick, one-off analyses of files with an Ollama model. If your needs grow (say, many documents, or desire for a fancier UI), you might outgrow Chat-with-Notes. But as a starting point or a personal “ask my PDF” solution on top of Ollama, it’s very effective.

Conclusion

Each of these open-source UIs can enhance your experience with local Ollama models by providing a user-friendly chat interface and extra capabilities like document question-answering. The best choice depends on your requirements and technical comfort:

- For quick setup and browser-based usage: Page Assist is a great choice, integrating directly into your web browsing with minimal fuss.

- For a full-featured web app environment: Open WebUI or LibreChat offer extensive features and multi-model flexibility, suitable for power users or multi-user setups.

- For a polished ChatGPT alternative with plugin potential: LobeChat provides a nice balance of usability and features in a self-hosted package.

- For document-focused interactions: AnythingLLM delivers an all-in-one solution (particularly if you like having a desktop app), whereas Chat-with-Notes offers a minimalistic approach for single-document Q&A.

Because all of these tools are actively maintained, you can also look forward to improvements and community support. By choosing one of these UIs, you’ll be able to chat with your local Ollama-hosted models in a convenient way, whether that means analyzing documents, coding with assistance, or just having conversational AI available without cloud dependencies. Each solution above is open-source, so you can further tailor it to your needs or even contribute to its development. To see how local Ollama plus these UIs fits with other local and cloud options, check our LLM Hosting: Local, Self-Hosted & Cloud Infrastructure Compared guide.

Happy chatting with your local LLM!