Self-hosting Perplexica with Ollama

Running a Copilot-style service locally? Easy!

That’s very exciting! Instead of calling Copilot or Perplexity.ai and telling the whole world what you are after, you can now host a similar service on your own PC or laptop!

What it is

Perplexica is a system similar to Copilot and Perplexity.ai.

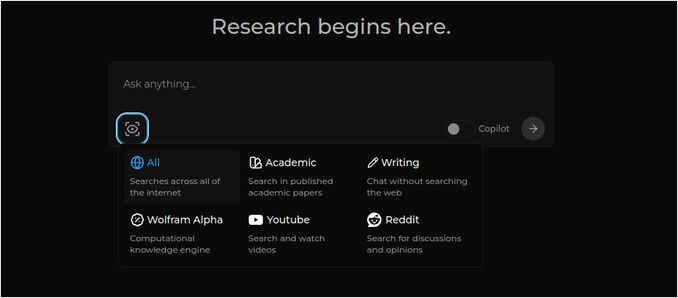

- You ask a question

- The AI searches the internet for answers (you can specify where to search: academic papers, writing, YouTube, Reddit…)

- The AI then summarises everything it has found

- It presents the result with references to the original websites

- It also shows a list of related images and YouTube videos on the right

- It also suggests follow-up questions you can click if you’d like to explore the topic further

Those systems are hosted in the cloud and belong to corporations (Copilot, for example, belongs to Microsoft). Perplexica is open-source software that you can host on your PC or a powerful laptop.

Here we see Perplexica's response to the question "Who is Elon Musk?", using the llama3.1 8b q6 chat model and the jina embedding model.

Perplexica consists of several modules:

- SearxNG: a metasearch engine. It queries 10+ other search engines and returns their results, so Perplexica can combine them. SearxNG is highly configurable in its own right: you can turn each engine on or off and add new ones. For our purposes, though, the default configuration works well.

- Perplexica backend and frontend. Technically these are two separate modules: the backend provides the API, and the frontend provides the UI.

- Ollama service: this is not part of the Perplexica project, but if you want your LLMs hosted locally, Ollama is the way to run them for Perplexica.

So installing the whole system consists of two big steps:

- Install Ollama and download the Ollama models

- Install Perplexica together with SearxNG

Installing Ollama

To begin with Ollama, follow these steps:

Install Ollama by running the install script:

curl -fsSL https://ollama.com/install.sh | sh

Tell Ollama to download your favorite LLM. If it is Llama3.1 8b q4, run:

ollama pull llama3.1:latest

Pull the latest version of nomic-embed-text to use as the embedding model (if it is your favorite):

ollama pull nomic-embed-text:latest

Create an override for the Ollama service by running:

sudo systemctl edit ollama.service

Add the following lines to expose Ollama to the network (Perplexica needs to connect to it from inside Docker):

[Service]

Environment="OLLAMA_HOST=0.0.0.0"

Reload the systemd daemon and restart the Ollama service:

sudo systemctl daemon-reload

sudo systemctl restart ollama
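If you'd rather not use the interactive editor (for example, when setting up several machines), you can write the same drop-in from a script. This is a minimal sketch. It assumes the default location where systemctl edit ollama.service stores its override, /etc/systemd/system/ollama.service.d/override.conf. The function name write_ollama_override is hypothetical. You still need to run the daemon-reload and restart commands afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes a systemd drop-in that makes Ollama listen
# on all network interfaces (OLLAMA_HOST=0.0.0.0, default port 11434).
# Assumption: the default directory is where `systemctl edit ollama.service`
# keeps its override file.
write_ollama_override() {
  local dir="${1:-/etc/systemd/system/ollama.service.d}"
  mkdir -p "$dir" || return 1
  # Overwrites any existing override.conf in that directory.
  cat > "${dir}/override.conf" <<'EOF'
[Service]
Environment="OLLAMA_HOST=0.0.0.0"
EOF
}
```

Writing under /etc needs root. One option is to save the block to a file (override.sh is just an example name) and run: sudo bash -c '. ./override.sh && write_ollama_override'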

Check that Ollama started successfully:

systemctl status ollama.service

sudo journalctl -u ollama.service --no-pager
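Perplexica talks to Ollama over HTTP. A quick way to confirm that the service answers, and that the OLLAMA_HOST change made it reachable from other machines, is to query the Ollama API directly. This is a minimal sketch that assumes curl is installed. It uses GET /api/tags, Ollama's endpoint for listing local models. check_ollama is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if the Ollama API answers at the given base URL.
# GET /api/tags lists the models Ollama has pulled locally.
check_ollama() {
  local base_url="${1:-http://localhost:11434}"
  # -s: silent, -f: treat HTTP errors as failures, --max-time: give up after 5 s
  if curl -sf --max-time 5 -o /dev/null "${base_url}/api/tags"; then
    echo "Ollama is reachable at ${base_url}"
  else
    echo "Ollama is NOT reachable at ${base_url}" >&2
    return 1
  fi
}
```

Run check_ollama on the Ollama machine itself. From another machine, run check_ollama http://SERVER_IP:11434, where SERVER_IP is a placeholder for your server's address.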

For a detailed description of how to install, update and configure Ollama, please see: Install and configure Ollama

For details on using other Ollama models with Perplexica, please see the section ‘Installing other Ollama models’ below.

Installing Perplexica

I installed the dockerised Perplexica on Linux, but a very similar docker compose setup can be used on Windows or Mac.

Let’s go!

Getting Started with Docker (Recommended)

Ensure Docker is installed and running on your system.

Clone the Perplexica repository:

git clone https://github.com/ItzCrazyKns/Perplexica.git

After cloning, navigate to the directory containing the project files.

cd Perplexica

Rename the sample.config.toml file to config.toml. If you intend to update Perplexica later (to git pull into this repository), copy sample.config.toml to config.toml instead, so the sample file stays untouched:

cp sample.config.toml config.toml

Edit the config file:

nano config.toml

For Docker setups, you only need to fill in the following field:

OLLAMA: your Ollama API URL.

Enter it as http://host.docker.internal:PORT_NUMBER.

If you installed Ollama on port 11434 (the default), use http://host.docker.internal:11434. For other ports, adjust accordingly.
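If you prefer not to open an editor, you can script the same change. This is a minimal sketch with a few assumptions. Your config.toml must hold the URL on a line of the form OLLAMA = "..."; check your copy, because the layout of sample.config.toml may change between Perplexica versions. It also assumes GNU sed, as on Linux. set_ollama_url is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sets the OLLAMA URL in a Perplexica config.toml.
# Assumes a line like:  OLLAMA = ""  and GNU sed (sed -i without a suffix).
# Note: a URL containing '|' or '&' would need escaping for sed.
set_ollama_url() {
  local config_file="$1"
  local url="$2"
  if ! grep -Eq '^[[:space:]]*OLLAMA[[:space:]]*=' "$config_file"; then
    echo "No OLLAMA key found in ${config_file}" >&2
    return 1
  fi
  # Keep the key and its spacing (group 1), replace only the value.
  sed -i -E "s|^([[:space:]]*OLLAMA[[:space:]]*=[[:space:]]*).*|\1\"${url}\"|" "$config_file"
}
```

Usage, from the Perplexica directory: set_ollama_url config.toml http://host.docker.internal:11434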

While still in the Perplexica directory, run:

docker compose up -d

It will pull the SearxNG and base Node Docker images, build two Perplexica Docker images and start three containers. Wait a few minutes for the setup to complete.

You can access Perplexica at http://localhost:3000 in your web browser.
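The first start builds images, so the UI may take a few minutes to answer. A small polling loop saves you from refreshing the browser by hand. This is a sketch that assumes curl is installed; wait_for_url is a hypothetical helper name. If the UI never comes up, docker compose ps and docker compose logs are the places to look.

```shell
#!/usr/bin/env bash
# Hypothetical helper: polls a URL until it answers or the timeout (seconds) expires.
# The timeout is approximate: it counts only the sleep time between attempts.
wait_for_url() {
  local url="$1"
  local timeout="${2:-300}"
  local waited=0
  # -s: silent, -f: fail on HTTP errors, -o /dev/null: discard the page body
  until curl -sf --max-time 5 -o /dev/null "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s waiting for ${url}" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "${url} is up"
}
```

Usage: wait_for_url http://localhost:3000 600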

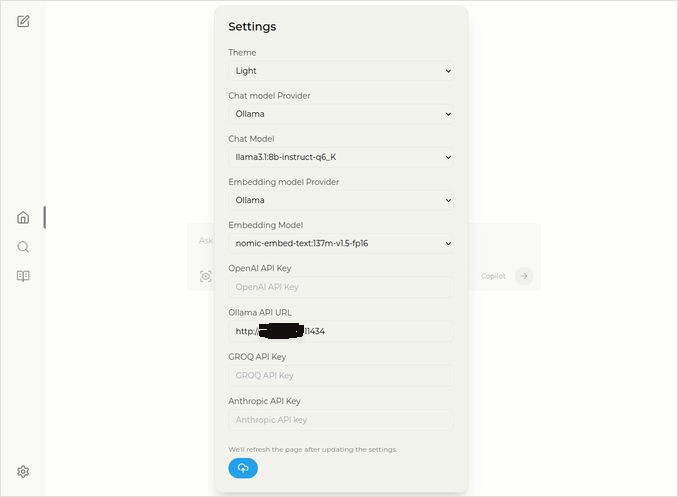

Go to Settings (the cog icon at the bottom left) and choose your Ollama models.

Here you can see the chat model llama3.1:8b-instruct-q6_K (Llama 3.1 8b with q6_K quantization) and the embedding model nomic-embed-text:137m-v1.5-fp16 selected.

You can also select the Light or Dark theme, whichever you prefer.

Perplexica search options (click the eye-in-a-box icon), in the Dark theme:

Installing other Ollama models

You already installed the models llama3.1:latest and nomic-embed-text:latest in the “Installing Ollama” section above.

You need only one chat model, but there are plenty of models available. They behave slightly differently, so it’s good to start with the most common ones: Llama3.1, Gemma2, Mistral Nemo or Qwen2.

Chat models

The full name of the chat model you pulled in the installation section, llama3.1:latest, is llama3.1:8b-instruct-q4_0. That means it has 8 billion parameters and q4_0 quantization. It’s fast and relatively small (4.8GB), but if your GPU has more memory, I would recommend trying:

- llama3.1:8b-instruct-q6_K (6.7GB): in my tests it gave much better responses, though it was a bit slower.

- llama3.1:8b-instruct-q8_0 (8.5GB): or maybe this one.

Overall, all the models in the llama3.1:8b group are relatively fast.

You can pull the ones I recommended with:

ollama pull llama3.1:8b-instruct-q6_K

ollama pull llama3.1:8b-instruct-q8_0
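If you want to try several of the models in this section, a small loop can pull them in one go and report any tag that fails, for example because of a typo in the name. This is a sketch that assumes the ollama CLI is on your PATH. pull_models is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pulls each model tag given as an argument with `ollama pull`
# and reports the ones that failed. Returns non-zero if any pull failed.
pull_models() {
  local failed=""
  local model
  for model in "$@"; do
    echo "Pulling ${model}..."
    if ! ollama pull "$model"; then
      failed="${failed} ${model}"
    fi
  done
  if [ -n "$failed" ]; then
    echo "Failed to pull:${failed}" >&2
    return 1
  fi
}
```

Usage: pull_models llama3.1:8b-instruct-q6_K llama3.1:8b-instruct-q8_0 gemma2:9b-instruct-q8_0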

Compared to Llama3.1:8b, Gemma2 produces more concise and artistic responses. Try these:

# 9.8GB

ollama pull gemma2:9b-instruct-q8_0

# 14GB

ollama pull gemma2:27b-instruct-q3_K_L

Mistral Nemo models produce responses somewhere in between Gemma2 and Llama3.1.

# the default one, 7.1GB

ollama pull mistral-nemo:12b-instruct-2407-q4_0

# 10GB

ollama pull mistral-nemo:12b-instruct-2407-q6_K

# 13GB

ollama pull mistral-nemo:12b-instruct-2407-q8_0

You might also want to try the Qwen2 models:

# the default one, 4.4GB

ollama pull qwen2:7b-instruct-q4_0

# 8.1GB

ollama pull qwen2:7b-instruct-q8_0

The models I liked the most are: llama3.1:8b-instruct-q6_K and mistral-nemo:12b-instruct-2407-q8_0.

To list the models Ollama has in its local repository:

ollama list

To remove a model you no longer need:

ollama rm qwen2:7b-instruct-q4_0 # for example
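In a script, it can be handy to check whether a model is already present before pulling or removing it. The sketch below relies on ollama list printing a header row followed by one model per line, with the model name in the first column. That layout is an assumption about the CLI's current output format. has_model is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the given model tag appears in `ollama list`.
# Assumes the output is a header row followed by rows whose first column is NAME.
has_model() {
  local wanted="$1"
  ollama list | awk -v m="$wanted" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}
```

Usage: has_model qwen2:7b-instruct-q4_0 && ollama rm qwen2:7b-instruct-q4_0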

Embedding models

You can skip installing these, since Perplexica comes with three preinstalled embedding models: BGE Small, GTE Small and Bert bilingual. They work reasonably well, but you might want to try other embedding models.

In the Ollama installation section above, you installed the nomic-embed-text:latest embedding model. It’s a good model, but I would also recommend trying:

ollama pull jina/jina-embeddings-v2-base-en:latest

# and

ollama pull bge-m3:567m-fp16

I liked the results of jina/jina-embeddings-v2-base-en:latest the most, but see for yourself.

Perplexica Network Install

If you install it on a network server, put your Perplexica server's IP address into docker-compose.yaml before running

docker compose up -d

If Perplexica is already running and you need to rebuild the images, stop it first:

# Stop it and remove the containers and their images (!!! only if you need to)

docker compose down --rmi all

Then open docker-compose.yaml and replace 127.0.0.1 with your server's IP address in the two lines marked "<< here":

nano docker-compose.yaml

perplexica-frontend:
  build:
    context: .
    dockerfile: app.dockerfile
    args:
      - NEXT_PUBLIC_API_URL=http://127.0.0.1:3001/api # << here
      - NEXT_PUBLIC_WS_URL=ws://127.0.0.1:3001 # << here
  depends_on:
    - perplexica-backend
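You can also script the replacement of those two addresses. This is a minimal sketch that assumes GNU sed and that the args lines look like the fragment above, with 127.0.0.1 as the address. set_perplexica_ip is a hypothetical helper name. The 192.168.1.10 in the usage line is a placeholder for your server's IP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: points the frontend's API and WebSocket URLs at a server IP.
# Only lines defining NEXT_PUBLIC_API_URL or NEXT_PUBLIC_WS_URL are touched.
set_perplexica_ip() {
  local compose_file="$1"
  local ip="$2"
  sed -i -E "/NEXT_PUBLIC_(API|WS)_URL=/ s|//127\.0\.0\.1:|//${ip}:|" "$compose_file"
}
```

Usage, from the Perplexica directory: set_perplexica_ip docker-compose.yaml 192.168.1.10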

Now start the Perplexica and SearxNG containers:

docker compose up -d

Or rebuild and start:

docker compose up -d --build

Updating Perplexica

For Perplexica running on Docker:

# navigate to the project folder
# where you cloned Perplexica during installation

cd Perplexica

# Stop it and remove the containers and their images (!!! only if you need to)

docker compose down --rmi all

# pull the updates

git pull

# Update and Rebuild Docker Containers:

docker compose up -d --build
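You can wrap the update steps above in one function, so they always run in the right order and stop at the first failure. This is a sketch that assumes the repository was cloned into the given directory. update_perplexica is a hypothetical helper name. It includes the optional docker compose down --rmi all step, which you can drop if you don't need it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: updates a dockerised Perplexica checkout.
update_perplexica() {
  local project_dir="${1:-Perplexica}"
  # The subshell keeps the caller's working directory unchanged;
  # && stops at the first failing step.
  (
    cd "$project_dir" &&
      # Stop and remove the containers and their images (optional)
      docker compose down --rmi all &&
      # Fetch the latest code
      git pull &&
      # Rebuild the images and start the containers in the background
      docker compose up -d --build
  )
}
```

Usage: update_perplexica ~/Perplexica (adjust the path to wherever you cloned the repository).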

For non-docker installations please see: https://github.com/ItzCrazyKns/Perplexica/blob/master/docs/installation/UPDATING.md

FAQ

Q: What is Perplexica?

A: Perplexica is a free, self-hosted AI search engine and an alternative to Perplexity.ai and Copilot that lets users run their own search engine locally on their computer.

Q: What are the steps to install and set up Perplexica with Ollama?

A: Install Ollama, pull the models, then install Perplexica.

Q: What customization options are available in Perplexica?

A: Options include choosing different models such as Llama 3.1, Mistral Nemo or Gemma2, setting up local embedding models, and exploring various search options such as news, academic papers, YouTube videos and Reddit forums.

Q: Which Ollama model should I use with Perplexica?

A: In our tests, the best results came from running Perplexica with llama3.1:8b-instruct-q6_K and jina/jina-embeddings-v2-base-en:latest.

Useful links

- Qwen3 Embedding & Reranker Models on Ollama: State-of-the-Art Performance

- Test: How Ollama is using Intel CPU Performance and Efficient Cores

- How Ollama Handles Parallel Requests

- Testing Deepseek-r1 on Ollama

- Installation instructions on Perplexica site: https://github.com/ItzCrazyKns/Perplexica

- Exposing Perplexica to network

- LLM speed performance comparison

- Install Ollama and Move Ollama Models to Different Folder

- Comparing LLM Summarising Abilities

- LLMs comparison: Mistral Small, Gemma 2, Qwen 2.5, Mistral Nemo, LLama3 and Phi

- Ollama cheatsheet