Self-Hosting Cognee: Choosing an LLM on Ollama

Testing Cognee with local LLMs - real results

Cognee is a Python framework for building knowledge graphs from documents using LLMs. But does it work with self-hosted models?

I tested it with multiple local LLMs to find out.

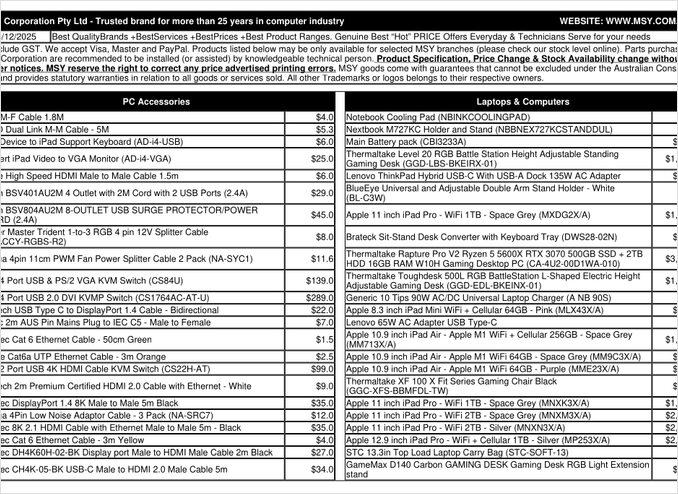

That’s the price list PDF page I tried to process.

TL;DR

Cognee probably works nicely with smart LLMs with hundreds of billions of parameters, but for self-hosted RAG setups expected to automatically extract data from PDFs (like pricelists), it failed to deliver on my hardware. The framework’s heavy reliance on structured output makes it challenging for smaller local models to perform reliably.

What is Cognee?

Cognee is an open-source Python framework designed to build knowledge graphs from unstructured documents using LLMs. Unlike traditional RAG systems that simply chunk and embed documents, Cognee attempts to create a semantic understanding by extracting entities, relationships, and concepts into a graph database. This approach aligns with advanced RAG architectures like GraphRAG, which promises better contextual retrieval.

The framework supports multiple backends:

- Vector databases: LanceDB (default), with support for other vector stores

- Graph databases: Kuzu (default), allowing complex relationship queries

- LLM providers: OpenAI, Anthropic, Ollama, and others

- Structured output frameworks: BAML and Instructor for constrained generation

For self-hosting enthusiasts, Cognee’s compatibility with Ollama makes it attractive for local deployments. However, the devil is in the details - as we’ll see, structured output requirements create significant challenges for smaller models.

Why Structured Output Matters

Cognee relies heavily on structured output to extract information from documents in a consistent format. When processing a document, the LLM must return properly formatted JSON containing entities, relationships, and metadata. This is where many smaller models struggle.

If you’re working with structured output in your own projects, understanding these constraints is crucial. The challenges I encountered with Cognee mirror broader issues in the LLM ecosystem when working with local models.
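To make the requirement concrete, here is a minimal sketch of the kind of check a graph-extraction reply has to pass. The schema (entities with id, name and type; relationships with source, target and type) is a hypothetical simplification for illustration, not Cognee’s actual data model.

```python
import json

# Hypothetical, simplified schema; not Cognee's real data model.
REQUIRED_ENTITY_KEYS = {"id", "name", "type"}
REQUIRED_RELATION_KEYS = {"source", "target", "type"}


def validate_extraction(raw: str) -> list[str]:
    """Return the problems found in a model reply (an empty list means valid)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]

    problems = []
    entities = data.get("entities")
    relationships = data.get("relationships")
    if not isinstance(entities, list):
        problems.append("'entities' must be a list")
        entities = []
    if not isinstance(relationships, list):
        problems.append("'relationships' must be a list")
        relationships = []

    # Collect the ids of well-formed entities so relationships can be checked.
    known_ids = set()
    for i, entity in enumerate(entities):
        if not isinstance(entity, dict):
            problems.append(f"entity {i} is not an object")
            continue
        missing = REQUIRED_ENTITY_KEYS - entity.keys()
        if missing:
            problems.append(f"entity {i} is missing {sorted(missing)}")
        else:
            known_ids.add(str(entity["id"]))

    # Every relationship must point at entities that actually exist.
    for i, rel in enumerate(relationships):
        if not isinstance(rel, dict):
            problems.append(f"relationship {i} is not an object")
            continue
        missing = REQUIRED_RELATION_KEYS - rel.keys()
        if missing:
            problems.append(f"relationship {i} is missing {sorted(missing)}")
            continue
        for end in ("source", "target"):
            if str(rel[end]) not in known_ids:
                problems.append(f"relationship {i} references unknown {end} {rel[end]!r}")
    return problems
```

A reply that opens with conversational text, drops a required key, or links to an entity it never declared fails this check, even when the model clearly understood the document.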

Configuration Setup

Here’s my working configuration for Cognee with Ollama. Note the key settings that enable local operation:

TELEMETRY_DISABLED=1

# STRUCTURED_OUTPUT_FRAMEWORK="instructor"
STRUCTURED_OUTPUT_FRAMEWORK="BAML"

# LLM Configuration
LLM_API_KEY="ollama"
LLM_MODEL="gpt-oss:20b"
LLM_PROVIDER="ollama"
LLM_ENDPOINT="http://localhost:11434/v1"
# LLM_MAX_TOKENS="25000"

# Embedding Configuration
EMBEDDING_PROVIDER="ollama"
EMBEDDING_MODEL="avr/sfr-embedding-mistral:latest"
EMBEDDING_ENDPOINT="http://localhost:11434/api/embeddings"
EMBEDDING_DIMENSIONS=4096
HUGGINGFACE_TOKENIZER="Salesforce/SFR-Embedding-Mistral"

# BAML Configuration
BAML_LLM_PROVIDER="ollama"
BAML_LLM_MODEL="gpt-oss:20b"
BAML_LLM_ENDPOINT="http://localhost:11434/v1"

# Database Settings (defaults)
DB_PROVIDER="sqlite"
VECTOR_DB_PROVIDER="lancedb"
GRAPH_DATABASE_PROVIDER="kuzu"

# Auth
REQUIRE_AUTHENTICATION=False
ENABLE_BACKEND_ACCESS_CONTROL=False
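One setting worth sanity-checking is EMBEDDING_DIMENSIONS: assuming the vector store is sized from this value, it has to equal the length of the vectors the embedding model actually returns. The sketch below parses a .env-style text with the standard library and compares the configured number against a sample vector. Both helper names are made up for illustration, and obtaining a real embedding from Ollama is left out.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blanks and # comments, stripping quotes."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


def embedding_dimension_ok(settings: dict[str, str], embedding: list[float]) -> bool:
    """True if the vector length equals the configured EMBEDDING_DIMENSIONS."""
    return len(embedding) == int(settings["EMBEDDING_DIMENSIONS"])


if __name__ == "__main__":
    sample = 'EMBEDDING_MODEL="avr/sfr-embedding-mistral:latest"\nEMBEDDING_DIMENSIONS=4096\n'
    settings = parse_env(sample)
    # A placeholder vector stands in for a real embedding returned by the model.
    print(embedding_dimension_ok(settings, [0.0] * 4096))
```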

Key Configuration Choices

Structured Output Framework: I tested BAML, which provides better control over output schemas compared to basic prompting. BAML is designed specifically for structured LLM outputs, making it a natural fit for knowledge graph extraction tasks.

LLM Provider: Using Ollama’s OpenAI-compatible API endpoint (/v1) allows Cognee to treat it like any other OpenAI-style service.

Embedding Model: The SFR-Embedding-Mistral model (4096 dimensions) provides high-quality embeddings. The Qwen3 embedding models are an alternative worth considering, with strong multilingual capabilities.

Databases: SQLite for metadata, LanceDB for vectors, and Kuzu for the knowledge graph keep everything local without external dependencies.
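To illustrate what "OpenAI-compatible" means in practice, here is a minimal sketch that builds a chat completion request for Ollama’s /v1 endpoint using only the standard library. It talks to Ollama directly and is not how Cognee issues its calls internally. The function names are illustrative, and the model must already be pulled for the request to succeed.

```python
import json
import urllib.request

OLLAMA_BASE_URL = "http://localhost:11434/v1"  # same value as LLM_ENDPOINT


def build_chat_request(prompt: str, model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
    }
    return urllib.request.Request(
        url=f"{OLLAMA_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Ollama ignores the key, but OpenAI-style clients expect one.
            "Authorization": "Bearer ollama",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Requires a running Ollama instance with the model available.
    print(send(build_chat_request("Reply with the single word: ready")))
```

Running this before a long cognify() job is a cheap way to confirm that the endpoint and model name in the configuration are reachable.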

Install Cognee

Installation is straightforward using uv (or pip). I recommend using uv for faster dependency resolution:

uv venv && source .venv/bin/activate
uv pip install "cognee[ollama]"
uv pip install "cognee[baml]"
uv pip install "cognee[instructor]"
uv sync --extra scraping
uv run playwright install
sudo apt-get install libavif16

The [ollama], [baml], and [instructor] extras install the necessary dependencies for local LLM operation and structured output. The scraping extra adds web scraping capabilities, while Playwright enables JavaScript-rendered page processing.

Example Code and Usage

Here’s the basic workflow for processing documents with Cognee. First, we add documents and build the knowledge graph:

msy-add.py:

import cognee
import asyncio


async def main():
    # Create a clean slate for cognee -- reset data and system state
    await cognee.prune.prune_data()
    await cognee.prune.prune_system(metadata=True)

    # Add sample content
    await cognee.add(
        "/home/rg/prj/prices/msy_parts_price_20251224.pdf",
        node_set=["price_list", "computer_parts", "2025-12-24", "aud"]
    )

    # Process with LLMs to build the knowledge graph
    await cognee.cognify()


if __name__ == '__main__':
    asyncio.run(main())

The node_set parameter provides semantic tags that help categorize the document in the knowledge graph. The cognify() method is where the magic (or problems) happen - it sends document chunks to the LLM for entity and relationship extraction.

msy-search.py:

import cognee
import asyncio


async def main():
    # Search the knowledge graph
    results = await cognee.search(
        query_text="What products are in the price list?"
        # query_text="What is the average price for 32GB RAM (2x16GB modules)?"
    )

    # Print
    for result in results:
        print(result)


if __name__ == '__main__':
    asyncio.run(main())

Unlike traditional vector search in RAG systems, Cognee queries the knowledge graph, theoretically enabling more sophisticated relationship-based retrieval. This is similar to how advanced RAG architectures work, but requires the initial graph construction to succeed.

Test Results: LLM Performance

I tested Cognee with a real-world use case: extracting product information from a computer parts price list PDF. This seemed like an ideal scenario - structured data in a tabular format. Here’s what happened with each model:

Models Tested

1. gpt-oss:20b (20 billion parameters)

- Result: Failed with character encoding errors

- Issue: Returned malformed structured output with incorrect character codes

- Note: Despite being OpenAI’s open-weight release, it couldn’t maintain consistent JSON formatting

2. qwen3:14b (14 billion parameters)

- Result: Failed to produce structured output

- Issue: Model would generate text but not in the required JSON schema

- Note: Qwen models typically perform well, but this task exceeded its structured output capabilities

3. deepseek-r1:14b (14 billion parameters)

- Result: Failed to produce structured output

- Issue: Similar to qwen3, couldn’t adhere to the BAML schema requirements

- Note: The reasoning capabilities didn’t help with format compliance

4. devstral:24b (24 billion parameters)

- Result: Failed to produce structured output

- Issue: Even with more parameters, couldn’t consistently generate valid JSON

- Note: Specialized coding model still struggled with strict schema adherence

5. ministral-3:14b (14 billion parameters)

- Result: Failed to produce structured output

- Issue: Mistral’s smaller model couldn’t handle the structured output demands

6. qwen3-vl:30b-a3b-instruct (30 billion parameters, roughly 3 billion active per token)

- Result: Failed to produce structured output

- Issue: Vision capabilities didn’t help with PDF table extraction in this context

7. gpt-oss:120b (120 billion parameters)

- Result: Didn’t complete processing after 2+ hours

- Hardware: Consumer GPU setup

- Issue: Model was too large for practical self-hosted use, even if it might have worked eventually

Key Findings

Chunk Size Limitation: Cognee uses 4k token chunks when processing documents with Ollama. For complex documents or models with larger context windows, this seems unnecessarily restrictive. The framework doesn’t provide an easy way to adjust this parameter.
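To show what a fixed chunk size implies, here is a minimal sketch of splitting a token sequence into chunks with optional overlap. It is an illustration only, not Cognee’s chunker: a plain list of strings stands in for tokens, whereas a real pipeline would use the model’s tokenizer, and the 4096 default simply mirrors the 4k figure above.

```python
def chunk_tokens(tokens: list[str], chunk_size: int = 4096, overlap: int = 0) -> list[list[str]]:
    """Split tokens into consecutive chunks of at most chunk_size tokens.

    Each chunk after the first repeats the last `overlap` tokens of the
    previous chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(tokens):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once this chunk has reached the end, so no redundant tail is emitted.
        if start + chunk_size >= len(tokens):
            break
        start += step
    return chunks


if __name__ == "__main__":
    document = ["tok"] * 25_000
    print(len(chunk_tokens(document)))                     # chunks at 4096 tokens each
    print(len(chunk_tokens(document, chunk_size=16_384)))  # chunks with a larger window
```

Every chunk is a separate extraction call, so a smaller chunk size multiplies the number of times the model has to produce valid structured output for one document.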

Structured Output Requirements: The core issue isn’t model intelligence but format compliance. These models can understand the content, but maintaining consistent JSON schema throughout the extraction process proves challenging. This aligns with broader challenges in getting local models to respect output constraints.

Hardware Considerations: Even when a sufficiently large model might work (like gpt-oss:120b), the hardware requirements make it impractical for most self-hosting scenarios. You’d need significant GPU memory and processing power.

Comparison with Structured Output Best Practices

This experience reinforces lessons from working with structured output across different LLM providers. Commercial APIs from OpenAI, Anthropic, and Google often have built-in mechanisms to enforce output schemas, while local models require more sophisticated approaches like grammar-based sampling or multiple validation passes.
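As one example of schema-constrained generation with a local model, recent Ollama versions accept a JSON schema in the format field of the native /api/chat endpoint and constrain decoding to it. The sketch below only builds such a payload. The schema, field names and prompt are made up for a price list, and whether Cognee’s BAML or Instructor integrations make use of this mechanism is not something these tests established.

```python
import json

# Hypothetical schema for a price list; the field names are illustrative.
PRODUCT_SCHEMA = {
    "type": "object",
    "properties": {
        "products": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "price_aud": {"type": "number"},
                },
                "required": ["name", "price_aud"],
            },
        }
    },
    "required": ["products"],
}


def build_constrained_chat_payload(text: str, model: str = "qwen3:14b") -> dict:
    """Build a payload for POST http://localhost:11434/api/chat with a schema-constrained reply."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": f"Extract every product and its price from this text:\n\n{text}",
            }
        ],
        "format": PRODUCT_SCHEMA,  # JSON schema the reply must conform to
        "stream": False,           # return one complete response object
        "options": {"temperature": 0},
    }


if __name__ == "__main__":
    print(json.dumps(build_constrained_chat_payload("32GB DDR5 kit $129"), indent=2))
```

Constrained decoding guarantees the shape of the reply, not its correctness: a model can still return well-formed JSON with wrong or missing products.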

For a deeper analysis of choosing the right LLM for Cognee on Ollama, including comparisons of model sizes and their performance characteristics, see the articles listed under Related Reading.

Alternative Approaches for Self-Hosted RAG

If you’re committed to self-hosting and need to extract structured data from documents, consider these alternatives:

1. Traditional RAG with Simpler Extraction

Instead of building a complex knowledge graph upfront, use traditional RAG with document chunking and vector search. For structured data extraction:

- Parse tables directly using libraries like pdfplumber or tabula-py

- Use simpler prompts that don’t require strict schema adherence

- Implement post-processing validation in Python rather than relying on LLM output format
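A minimal sketch of the post-processing idea: take rows in the shape pdfplumber’s extract_table() returns (a list of rows, each a list of strings or None) and validate them in plain Python instead of asking an LLM for a strict schema. The column layout (name first, price last) and the sample values are assumptions for illustration, not taken from the actual price list.

```python
from decimal import Decimal, InvalidOperation

# With pdfplumber installed, rows could come from something like:
#   with pdfplumber.open(path) as pdf:
#       rows = pdf.pages[0].extract_table()


def parse_price(cell):
    """Convert a cell such as '$1,099.00' to Decimal, or return None if it is not a price."""
    if cell is None:
        return None
    cleaned = cell.replace("$", "").replace(",", "").strip()
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        return None
    if not value.is_finite() or value <= 0:
        return None
    return value


def clean_rows(rows):
    """Split rows into validated products and rejected rows (headers, blanks, odd cells)."""
    products, rejected = [], []
    for row in rows:
        if len(row) < 2:
            rejected.append(row)
            continue
        name = (row[0] or "").strip()   # assumed layout: name in the first column
        price = parse_price(row[-1])    # assumed layout: price in the last column
        if name and price is not None:
            products.append({"name": name, "price_aud": price})
        else:
            rejected.append(row)
    return products, rejected


if __name__ == "__main__":
    sample = [["Product", "Price"], ["Example 32GB DDR5 kit", "$129.00"], ["Example part", "call"]]
    products, rejected = clean_rows(sample)
    print(products)
    print(rejected)
```

Rejected rows are kept rather than dropped, so they can be logged or reviewed by hand.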

2. Specialized Embedding Models

The quality of your embeddings significantly impacts retrieval performance. Consider using high-performing embedding models that work well locally. Modern embedding models like Qwen3’s offerings provide excellent multilingual support and can dramatically improve your RAG system’s accuracy.

3. Reranking for Better Results

Even with simpler RAG architectures, adding a reranking step can significantly improve results. After initial vector search retrieval, a reranker model can better assess relevance. This two-stage approach often outperforms more complex single-stage systems, especially when working with constrained hardware.
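The two-stage idea can be sketched in a few lines. Both scoring functions below are toy stand-ins: in a real setup the first stage would be a vector search and the second a dedicated reranker model. The function names and sample documents are made up for illustration.

```python
from typing import Callable

Scorer = Callable[[str, str], float]


def retrieve_then_rerank(
    query: str,
    documents: list[str],
    first_stage: Scorer,
    reranker: Scorer,
    candidates: int = 20,
    top_k: int = 5,
) -> list[str]:
    """Shortlist with a cheap scorer, then reorder the shortlist with a costlier one."""
    shortlist = sorted(documents, key=lambda d: first_stage(query, d), reverse=True)[:candidates]
    return sorted(shortlist, key=lambda d: reranker(query, d), reverse=True)[:top_k]


def word_overlap(query: str, doc: str) -> float:
    """Toy first stage: number of distinct query words that appear in the document."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


def overlap_density(query: str, doc: str) -> float:
    """Toy reranker: overlap normalised by document length, favouring focused matches."""
    words = doc.lower().split()
    return word_overlap(query, doc) / len(words) if words else 0.0


if __name__ == "__main__":
    docs = [
        "ddr5 ram 32gb 2x16gb kit with heat spreader for gaming pcs",
        "sata cable",
        "32gb ddr5 ram kit",
    ]
    print(retrieve_then_rerank("32gb ddr5 ram", docs, word_overlap, overlap_density, candidates=2, top_k=2))
```

The reranker only ever sees the shortlist, which is what keeps the expensive model affordable on constrained hardware.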

4. Hybrid Search Strategies

Combining vector search with traditional keyword search (BM25) often yields better results than either alone. Many modern vector databases support hybrid search out of the box.
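One common way to merge the two result lists is Reciprocal Rank Fusion (RRF), which needs only the rank positions and not the raw scores. The sketch below is a generic implementation and is not tied to any particular vector database. The document ids are placeholders, and k=60 is the constant conventionally used with RRF.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document ids into a single ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1 for the best hit.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    vector_hits = ["a", "b", "c"]  # placeholder ids from a vector search
    bm25_hits = ["b", "d", "a"]    # placeholder ids from a keyword search
    print(reciprocal_rank_fusion([vector_hits, bm25_hits]))
```

Documents that appear in both lists accumulate score from each, so they rise above documents that only one retriever found.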

5. Consider Vector Store Alternatives

If you’re building a RAG system from scratch, evaluate different vector stores based on your needs. Options range from lightweight embedded databases to distributed systems designed for production scale.

Docker Deployment Considerations

For production self-hosting, containerizing your RAG setup simplifies deployment and scaling. When running Cognee or similar frameworks with Ollama:

# Run Ollama in a container

docker run -d --gpus=all -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

# Pull your models

docker exec -it ollama ollama pull gpt-oss:20b

# Configure Cognee to connect to the container endpoint

Make sure to properly configure GPU passthrough and volume mounts for model persistence.

Lessons Learned

1. Match Tools to Hardware: Cognee is designed for cloud-scale LLMs. If you’re self-hosting on consumer hardware, simpler architectures may be more practical.

2. Structured Output is Hard: Getting consistent schema compliance from local LLMs remains challenging. If your application critically depends on structured output, either use commercial APIs or implement robust validation and retry logic.

3. Test Early: Before committing to a framework, test it with your specific use case and hardware. What works in demos may not work at scale or with your documents.

4. Consider Hybrid Approaches: Use commercial APIs for complex extraction tasks and local models for simpler queries to balance cost and capability.
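The validation and retry logic from lesson 2 can be sketched as a small loop that feeds the rejection reason back to the model. The generate argument is any callable that takes a prompt and returns the model’s text, so the loop is independent of Ollama or Cognee. The function name and the required keys are illustrative assumptions.

```python
import json
from typing import Callable


def extract_with_retries(
    generate: Callable[[str], str],
    prompt: str,
    required_keys: set[str],
    max_attempts: int = 3,
) -> dict:
    """Call generate until it returns a JSON object with all required keys."""
    current_prompt = prompt
    last_error = "no attempts made"
    for _ in range(max_attempts):
        raw = generate(current_prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"invalid JSON ({exc.msg})"
        else:
            if not isinstance(data, dict):
                last_error = "expected a JSON object"
            else:
                missing = required_keys - data.keys()
                if not missing:
                    return data
                last_error = f"missing keys: {sorted(missing)}"
        # Tell the model what was wrong so the next attempt can correct it.
        current_prompt = (
            f"{prompt}\n\nYour previous reply was rejected: {last_error}. "
            "Reply with JSON only."
        )
    raise ValueError(f"no valid output after {max_attempts} attempts: {last_error}")
```

Capping the attempts matters on local hardware, where each retry can cost minutes rather than seconds.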

Related Reading

Structured Output with LLMs

Understanding structured output is crucial for frameworks like Cognee. These articles dive deep into getting consistent, schema-compliant responses from LLMs:

- Choosing the Right LLM for Cognee: Local Ollama Setup

- LLMs with Structured Output: Ollama, Qwen3 & Python or Go

- Structured output across popular LLM providers - OpenAI, Gemini, Anthropic, Mistral and AWS Bedrock

- Ollama GPT-OSS Structured Output Issues

RAG Architecture and Implementation

For alternative or complementary approaches to knowledge extraction and retrieval:

- Advanced RAG: LongRAG, Self-RAG and GraphRAG

- Vector Stores for RAG Comparison

- Building MCP Servers in Python: WebSearch & Scrape

Embedding and Reranking

Improving retrieval quality through better embeddings and reranking:

- Qwen3 Embedding & Reranker Models on Ollama: State-of-the-Art Performance

- Reranking with embedding models

- Reranking text documents with Ollama and Qwen3 Embedding model - in Go