Running FLUX.1-dev GGUF Q8 in Python

Speed up FLUX.1-dev with GGUF quantization

FLUX.1-dev is a powerful text-to-image model that produces stunning results, but its 24GB+ memory requirement makes it challenging to run on many systems. GGUF quantization of FLUX.1-dev offers a solution, reducing memory usage by approximately 50% while maintaining excellent image quality.

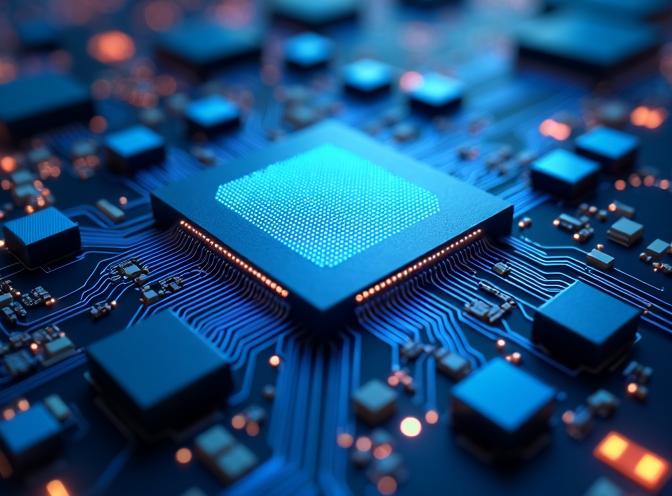

This image was generated using the Q8 quantized FLUX.1-dev model in GGUF format, demonstrating that quality is preserved even with a reduced memory footprint.

What is GGUF Quantization?

GGUF (GPT-Generated Unified Format) is a file format for quantized model weights, originally developed for language models and now also supported for diffusion models like FLUX. Quantization reduces model size by storing weights in lower-precision formats (8-bit, 6-bit, or 4-bit) instead of full 16-bit or 32-bit precision.
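To make the idea concrete, here is a minimal pure-Python sketch of 8-bit block quantization in the style of Q8_0: weights are grouped into blocks of 32, and each block stores one scale plus 32 small integers. The function names and sample weights are made up for illustration, and the real GGUF kernels differ in details such as rounding and storage layout.

```python
BLOCK_SIZE = 32  # Q8_0 groups weights into blocks of 32


def quantize_block(values):
    """Return (scale, codes) for one block; codes fit in a signed 8-bit integer."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(v / scale) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Rebuild approximate float weights from the scale and integer codes."""
    return [scale * c for c in codes]


# Hypothetical weights in roughly [-0.32, 0.31], just to exercise the round trip
weights = [((i * 37) % 64 - 32) / 100 for i in range(BLOCK_SIZE)]

scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"scale={scale:.6f}, max round-trip error={max_error:.6f}")
```

The round-trip error per weight is bounded by half the block scale, which is why 8-bit quantization usually changes the output very little.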

For FLUX.1-dev, the transformer component (the largest part of the model) can be quantized, reducing its memory footprint from approximately 24GB to 12GB with Q8_0 quantization, or even lower with more aggressive quantization levels.
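The arithmetic behind those figures can be sketched as follows. This assumes the transformer has roughly 12 billion parameters and uses the effective bits per weight of the ggml block layouts (for Q8_0, 32 8-bit values plus one 16-bit scale per block, so 8.5 bits per weight). Treat the results as rough estimates: real GGUF files keep some tensors in higher precision, and inference needs extra memory for activations and the other pipeline components.

```python
PARAMS = 12e9  # assumption: roughly 12 billion transformer parameters

# Effective bits per weight, including per-block scale overhead (assumed layouts)
BITS_PER_WEIGHT = {
    "BF16": 16.0,
    "Q8_0": 8.5,     # 32 int8 values + one 16-bit scale per block of 32
    "Q6_K": 6.5625,
    "Q4_K": 4.5,
}


def estimate_gb(params, bits_per_weight):
    """Estimated weight storage in gigabytes (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{estimate_gb(PARAMS, bits):.1f} GB")
```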

Benefits of GGUF Quantization

The primary advantages of using GGUF quantized FLUX models include:

- Reduced Memory Usage: Cut VRAM requirements in half, making FLUX.1-dev accessible on more hardware

- Maintained Quality: Q8_0 quantization preserves image quality with minimal visible differences

- Faster Loading: Quantized models load faster due to smaller file sizes

- Lower Power Consumption: Reduced memory usage translates to lower power draw during inference

In our testing, the Q8_0 quantized model uses approximately 14-15GB of VRAM, compared to 24GB+ for the full model. Timings are given in the Performance Comparison section.

Installation and Setup

To use GGUF quantized FLUX.1-dev, you’ll need the gguf package in addition to the standard diffusers dependencies. If you’re already using FLUX for text-to-image generation, you’re familiar with the base setup.

If you’re using uv as your Python package manager, you can install the required packages with:

uv pip install -U diffusers torch transformers gguf

Or with standard pip:

pip install -U diffusers torch transformers gguf
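After installing, a quick way to confirm that all four packages are visible to your interpreter is to query their installed versions. This is only a convenience sketch using the standard library; the helper name is made up for illustration.

```python
from importlib.metadata import PackageNotFoundError, version

REQUIRED = ["diffusers", "torch", "transformers", "gguf"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(REQUIRED).items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```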

Implementation

The key difference when using GGUF quantized models is that you load the transformer separately using FluxTransformer2DModel.from_single_file() with GGUFQuantizationConfig, then pass it to the pipeline. If you need a quick reference for Python syntax, check the Python Cheatsheet. Here’s a complete working example:

import torch
from diffusers import FluxPipeline, FluxTransformer2DModel, GGUFQuantizationConfig

# Paths
gguf_model_path = "/path/to/flux1-dev-Q8_0.gguf"
# Original FLUX.1-dev files: configs, tokenizers, text encoders, VAE
# (the transformer weights are not needed here, they come from the GGUF file)
base_model_path = "/path/to/FLUX.1-dev-config"

# Load GGUF quantized transformer
print(f"Loading GGUF quantized transformer from: {gguf_model_path}")
transformer = FluxTransformer2DModel.from_single_file(
    gguf_model_path,
    quantization_config=GGUFQuantizationConfig(compute_dtype=torch.bfloat16),
    config=base_model_path,
    subfolder="transformer",
    torch_dtype=torch.bfloat16,
)

# Create pipeline with quantized transformer
print(f"Creating pipeline with base model: {base_model_path}")
pipe = FluxPipeline.from_pretrained(
    base_model_path,
    transformer=transformer,
    torch_dtype=torch.bfloat16,
)

# Enable model-level CPU offloading to keep peak VRAM usage down
pipe.enable_model_cpu_offload()
# Note: enable_sequential_cpu_offload() is NOT compatible with GGUF

# Generate image
prompt = "A futuristic cityscape at sunset with neon lights"
image = pipe(
    prompt,
    height=496,
    width=680,
    guidance_scale=3.5,
    num_inference_steps=60,
    max_sequence_length=512,
    generator=torch.Generator("cpu").manual_seed(42),
).images[0]

image.save("output.jpg")

Important Considerations

Model Configuration Files

When using GGUF quantization, you still need the model configuration files from the original FLUX.1-dev model. These include:

- model_index.json: pipeline structure

- Component configs (transformer, text_encoder, text_encoder_2, vae, scheduler)

- Tokenizer files

- Text encoder and VAE weights (these are not quantized)

The transformer weights come from the GGUF file, but all other components require the original model files.
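Before loading the pipeline, it can help to check that the base directory contains the pieces listed above. The sketch below assumes the usual diffusers folder layout of the FLUX.1-dev repository; the exact file names are an assumption, so adjust the list if your copy differs. It only checks that the entries exist, not that the weights inside them are complete.

```python
from pathlib import Path

# Assumed layout of a diffusers-format FLUX.1-dev directory
EXPECTED = [
    "model_index.json",
    "scheduler/scheduler_config.json",
    "text_encoder/config.json",
    "text_encoder_2/config.json",
    "tokenizer",
    "tokenizer_2",
    "transformer/config.json",
    "vae/config.json",
]


def missing_entries(base_dir):
    """Return the expected files or folders that are absent under base_dir."""
    base = Path(base_dir)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


# Placeholder path, replace with your own base model directory
print(missing_entries("/path/to/FLUX.1-dev-config"))
```

An empty list means every expected entry was found.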

CPU Offloading Compatibility

Important: enable_sequential_cpu_offload() is not compatible with GGUF quantized models and fails with a KeyError: None error. I use enable_model_cpu_offload() instead when working with quantized transformers.

Quantization Levels

Available quantization levels for FLUX.1-dev include:

- Q8_0: Best quality, ~14-15GB memory (recommended)

- Q6_K: Good balance, ~12GB memory

- Q4_K: Maximum compression, ~8GB memory (not tested here; I expect a noticeable drop in quality)

For most use cases, Q8_0 provides the best balance between memory savings and image quality.
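As a rough rule of thumb, the figures in the list above can be turned into a small selection helper. The thresholds are simply the upper ends of the approximate ranges quoted in this post, not measured limits, and the function name is made up for illustration.

```python
# Approximate VRAM needs in GB, taken from the list above (upper ends of the ranges)
VRAM_NEEDED_GB = [("Q8_0", 15), ("Q6_K", 12), ("Q4_K", 8)]


def pick_quant(available_gb):
    """Return the highest-quality level that should fit, or None if none does."""
    for name, needed in VRAM_NEEDED_GB:
        if available_gb >= needed:
            return name
    return None


for gb in (24, 16, 12, 8, 6):
    print(f"{gb} GB -> {pick_quant(gb)}")
```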

Performance Comparison

In our testing with identical prompts and settings:

| Model | VRAM Usage | Generation Time | Quality |
|---|---|---|---|
| Full FLUX.1-dev (no offloading) | ~24GB+ (not tested) | Not measured (no GPU that large) | Excellent (expected) |
| Full FLUX.1-dev with enable_sequential_cpu_offload() | ~3GB | ~329s | Excellent |
| GGUF Q8_0 | ~14-15GB | ~98s | Excellent |
| GGUF Q6_K | ~10-12GB | ~116s | Very Good |

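Because the quantized transformer fits in VRAM with far less CPU offloading, the Q8_0 model generates images more than 3 times faster than the full model with sequential CPU offload (~98s vs ~329s), while needing far less VRAM than the 24GB+ the full model requires without offloading. This makes it practical for systems with limited VRAM.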
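If you want to collect comparable numbers on your own hardware, one possible approach is to wrap the pipeline call in a small timing helper that also reads PyTorch's peak-memory counter. This is a sketch, not necessarily how the figures in this post were obtained; the helper name is hypothetical, and it falls back to timing only when torch or CUDA is unavailable.

```python
import time

try:
    import torch
    HAS_CUDA = torch.cuda.is_available()
except ImportError:  # torch not installed: timing still works
    torch = None
    HAS_CUDA = False


def measure(fn, *args, **kwargs):
    """Run fn once; return (result, seconds, peak_vram_gb or None)."""
    if HAS_CUDA:
        torch.cuda.reset_peak_memory_stats()
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    if HAS_CUDA:
        torch.cuda.synchronize()  # wait for queued GPU work before stopping the clock
    seconds = time.perf_counter() - start
    peak_gb = torch.cuda.max_memory_allocated() / 1e9 if HAS_CUDA else None
    return result, seconds, peak_gb


# Stand-in workload; with the pipeline you would call measure(pipe, prompt, ...)
result, seconds, peak_gb = measure(sum, range(1_000_000))
print(f"took {seconds:.3f}s, peak VRAM: {peak_gb}")
```

Note that this counter only tracks memory allocated through PyTorch on the current device, so it will typically read lower than what nvidia-smi reports.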

I tested both models with the following prompt:

A futuristic close-up of a transformer inference ASIC chip with intricate circuitry, glowing blue light emitting from dense matrix multiply units and low-precision ALUs, surrounded by on-chip SRAM buffers and quantization pipelines, rendered in hyper-detailed photorealistic style with a cold, clinical lighting scheme.

The sample output of FLUX.1-dev Q8 is the cover image of this post (see above).

The sample output of non-quantized FLUX.1-dev is below:

I don’t see much difference in quality.

Conclusion

GGUF quantization makes FLUX.1-dev accessible to a broader range of hardware while maintaining the high-quality image generation the model is known for. By reducing memory requirements by approximately 50%, you can run state-of-the-art text-to-image generation on more affordable hardware without significant quality loss.

The implementation is straightforward with the diffusers library, requiring only minor changes to the standard FLUX pipeline setup. For most users, Q8_0 quantization provides the optimal balance between memory efficiency and image quality.

If you’re working with FLUX.1-Kontext-dev for image augmentation, similar quantization techniques may become available in the future.

References

- HuggingFace Diffusers GGUF Documentation - Official documentation on using GGUF with diffusers

- Unsloth FLUX.1-dev-GGUF - Pre-quantized GGUF models for FLUX.1-dev

- Black Forest Labs FLUX.1-dev - Original FLUX.1-dev model repository

- GGUF Format Specification - Technical details on the GGUF format