Constraining LLMs with Structured Output: Ollama, Qwen3 & Python or Go

A couple of ways to get structured output from Ollama

Large Language Models (LLMs) are powerful, but in production we rarely want free-form paragraphs. Instead, we want predictable data: attributes, facts, or structured objects we can feed into an app. That is what LLM structured output provides.

Some time ago Ollama introduced structured output support (announcement), making it possible to constrain a model’s responses to match a JSON schema. This unlocks consistent data extraction pipelines for tasks like cataloging LLM features, benchmarking models, or automating system integration.

In this post, we’ll cover:

- What structured output is and why it matters

- A simple way to get structured output from LLMs

- How Ollama’s new feature works

- Examples of extracting LLM capabilities in Python and Go

What Is Structured Output?

Normally, LLMs generate free text:

“Model X supports reasoning with chain-of-thought, has a 200K context window, and speaks English, Chinese, and Spanish.”

That’s readable, but hard to parse.
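To see why, here is a minimal sketch of the regex approach you would otherwise need. The pattern and the rephrased sentence are illustrative assumptions: the same fact is extracted from one wording and silently missed in another.

```python
import re
from typing import Optional

# Hypothetical hand-written pattern for "<number>K context window"
CONTEXT_RE = re.compile(r"(\d+)K context window", re.IGNORECASE)


def context_tokens_from_text(text: str) -> Optional[int]:
    """Return the context window in tokens, or None if the pattern does not match."""
    match = CONTEXT_RE.search(text)
    if match is None:
        return None
    return int(match.group(1)) * 1000  # treats "K" as 1,000 tokens


original = ("Model X supports reasoning with chain-of-thought, has a 200K context window, "
            "and speaks English, Chinese, and Spanish.")
rephrased = "Model X can attend to 200,000 tokens of context."

print(context_tokens_from_text(original))   # 200000
print(context_tokens_from_text(rephrased))  # None: same fact, different wording
```

Every new phrasing needs another pattern, which is exactly the brittleness structured output avoids.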

Instead, with structured output we ask for a strict schema:

{
  "name": "Model X",
  "supports_thinking": true,
  "max_context_tokens": 200000,
  "languages": ["English", "Chinese", "Spanish"]
}

This JSON is easy to validate, store in a database, or feed to a UI.
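As a rough illustration of "easy to validate", here is a minimal standard-library sketch that checks the object above for missing fields and wrong types. The `EXPECTED_TYPES` mapping is our own assumption; in practice Pydantic (used later in this post) does this job for you.

```python
import json

# Hypothetical field -> Python type mapping for the schema above
EXPECTED_TYPES = {
    "name": str,
    "supports_thinking": bool,
    "max_context_tokens": int,
    "languages": list,
}


def schema_errors(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record matches."""
    errors = []
    for field, expected in EXPECTED_TYPES.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif expected is int and isinstance(record[field], bool):
            # bool is a subclass of int in Python, so reject it explicitly
            errors.append(f"{field}: expected int, got bool")
        elif not isinstance(record[field], expected):
            errors.append(f"{field}: expected {expected.__name__}, "
                          f"got {type(record[field]).__name__}")
    return errors


raw = '''{"name": "Model X", "supports_thinking": true,
          "max_context_tokens": 200000,
          "languages": ["English", "Chinese", "Spanish"]}'''
print(schema_errors(json.loads(raw)))  # []
print(schema_errors({"name": "Model X", "max_context_tokens": "200K"}))  # three problems
```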

A Simple Way to Get Structured Output from an LLM

Many LLMs can follow a schema described in the prompt, so the simplest approach is to ask the model to return JSON in a particular shape. Alibaba’s Qwen3 handles reasoning and structured responses well, and you can explicitly instruct it to respond in JSON.

Example 1: Using Qwen3 with Ollama in Python, requesting JSON with a schema

import json

import ollama

prompt = """
You are a structured data extractor.
Return JSON only.
Text: "Elon Musk is 53 and lives in Austin."
Schema: { "name": string, "age": int, "city": string }
"""

response = ollama.chat(model="qwen3", messages=[{"role": "user", "content": prompt}])
output = response["message"]["content"]

# Parse JSON: the model is only asked (not forced) to reply in JSON, so this can fail
try:
    data = json.loads(output)
    print(data)
except json.JSONDecodeError as e:
    print("Error parsing JSON:", e)

Output:

{"name": "Elon Musk", "age": 53, "city": "Austin"}
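Because this approach only asks for JSON, the reply is not guaranteed to be bare JSON: a model may wrap it in a Markdown code fence or, for a reasoning model like Qwen3, may prefix it with a `<think>...</think>` block depending on your Ollama version and settings. Below is a defensive sketch that strips such wrappers before parsing; the heuristic is our own assumption, not part of the ollama library.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built this way only to build the sample reply
THINK_RE = re.compile(r"<think>.*?</think>", re.DOTALL)


def extract_json(reply: str) -> dict:
    """Remove reasoning blocks, then parse the outermost JSON object in the reply."""
    # Drop any <think> block so braces inside the reasoning are not picked up
    text = THINK_RE.sub("", reply)
    # Heuristic: the object runs from the first "{" to the last "}", which also
    # skips a surrounding code fence or stray commentary
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")
    return json.loads(text[start : end + 1])


reply = ("<think>The user wants JSON.</think>\n"
         + FENCE + "json\n"
         + '{"name": "Elon Musk", "age": 53, "city": "Austin"}\n'
         + FENCE)
print(extract_json(reply))  # {'name': 'Elon Musk', 'age': 53, 'city': 'Austin'}
```

In Example 1 you would call `extract_json(output)` instead of `json.loads(output)`.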

Enforcing Schema Validation with Pydantic

To catch malformed outputs, you can validate the response against a Pydantic model in Python.

from pydantic import BaseModel

class Person(BaseModel):
    name: str
    age: int
    city: str

# Suppose 'output' is the JSON string from Qwen3
data = Person.model_validate_json(output)
print(data.name, data.age, data.city)

If the output does not match the expected structure, model_validate_json raises a pydantic.ValidationError instead of silently passing bad data downstream.

Ollama’s Structured Output

Ollama now lets you pass a schema in the format parameter. The model is then constrained to respond only in JSON that conforms to the schema (docs).

In Python, you typically define your schema as a Pydantic model and pass its model_json_schema() output as the format argument.
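The Python client talks to Ollama’s REST API, so the same request can be made without it. A minimal standard-library sketch, assuming a local server on the default `http://localhost:11434` and the `/api/chat` endpoint; the schema is written by hand here instead of being generated by Pydantic, and `build_chat_payload` / `chat_structured` are our own helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # assumed default local endpoint

PERSON_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
        "city": {"type": "string"},
    },
    "required": ["name", "age", "city"],
}


def build_chat_payload(model: str, prompt: str, schema: dict) -> dict:
    """Request body for /api/chat with the output constrained by a JSON schema."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": schema,               # the schema object itself, not a string
        "options": {"temperature": 0},
        "stream": False,                # one JSON response instead of a stream
    }


def chat_structured(model: str, prompt: str, schema: dict) -> dict:
    """POST the request and parse the constrained reply (needs a running Ollama server)."""
    body = json.dumps(build_chat_payload(model, prompt, schema)).encode("utf-8")
    req = urllib.request.Request(OLLAMA_URL, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    return json.loads(reply["message"]["content"])


if __name__ == "__main__":
    payload = build_chat_payload("qwen3", "Elon Musk is 53 and lives in Austin.", PERSON_SCHEMA)
    print(json.dumps(payload, indent=2))
```

Keeping the payload builder separate from the network call makes it easy to log or unit-test exactly what is sent.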

Example 2: Extract LLM Feature Metadata

Suppose you have a text snippet describing an LLM’s abilities:

“Qwen3 has strong multilingual support (English, Chinese, French, Spanish, Arabic). It allows reasoning steps (chain-of-thought). The context window is 128K tokens.”

You want structured data:

from pydantic import BaseModel
from typing import List
from ollama import chat

class LLMFeatures(BaseModel):
    name: str
    supports_thinking: bool
    max_context_tokens: int
    languages: List[str]

prompt = """
Analyze the following description and return the model’s features in JSON only.
Model description:
'Qwen3 has strong multilingual support (English, Chinese, French, Spanish, Arabic).
It allows reasoning steps (chain-of-thought).
The context window is 128K tokens.'
"""

resp = chat(
    model="qwen3",
    messages=[{"role": "user", "content": prompt}],
    format=LLMFeatures.model_json_schema(),
    options={"temperature": 0},
)

print(resp.message.content)

Possible output:

{
  "name": "Qwen3",
  "supports_thinking": true,
  "max_context_tokens": 128000,
  "languages": ["English", "Chinese", "French", "Spanish", "Arabic"]
}

Example 3: Compare Multiple Models

Feed in descriptions of multiple models and extract into structured form:

# Continues Example 2: reuses BaseModel, chat and LLMFeatures
from typing import List

class ModelComparison(BaseModel):
    models: List[LLMFeatures]

prompt = """
Extract features of each model into JSON.
1. Llama 3.1 supports reasoning. Context window is 128K. Languages: English only.
2. GPT-4 Turbo supports reasoning. Context window is 128K. Languages: English, Japanese.
3. Qwen3 supports reasoning. Context window is 128K. Languages: English, Chinese, French, Spanish, Arabic.
"""

resp = chat(
    model="qwen3",
    messages=[{"role": "user", "content": prompt}],
    format=ModelComparison.model_json_schema(),
    options={"temperature": 0},
)

print(resp.message.content)

Output:

{
  "models": [
    {
      "name": "Llama 3.1",
      "supports_thinking": true,
      "max_context_tokens": 128000,
      "languages": ["English"]
    },
    {
      "name": "GPT-4 Turbo",
      "supports_thinking": true,
      "max_context_tokens": 128000,
      "languages": ["English", "Japanese"]
    },
    {
      "name": "Qwen3",
      "supports_thinking": true,
      "max_context_tokens": 128000,
      "languages": ["English", "Chinese", "French", "Spanish", "Arabic"]
    }
  ]
}

This makes it straightforward to benchmark, visualize, or filter models by their features.
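For example, once the response is parsed, filtering and sorting are ordinary Python operations. A minimal sketch using the comparison output shown above, pasted in as a string so no model call is needed; `models_speaking` is a hypothetical helper:

```python
import json

# The ModelComparison output from above
raw = """{"models": [
  {"name": "Llama 3.1", "supports_thinking": true, "max_context_tokens": 128000,
   "languages": ["English"]},
  {"name": "GPT-4 Turbo", "supports_thinking": true, "max_context_tokens": 128000,
   "languages": ["English", "Japanese"]},
  {"name": "Qwen3", "supports_thinking": true, "max_context_tokens": 128000,
   "languages": ["English", "Chinese", "French", "Spanish", "Arabic"]}
]}"""

models = json.loads(raw)["models"]


def models_speaking(language: str) -> list[str]:
    """Names of models that list `language`, those with the most languages first."""
    matching = [m for m in models if language in m["languages"]]
    matching.sort(key=lambda m: len(m["languages"]), reverse=True)
    return [m["name"] for m in matching]


print(models_speaking("English"))   # ['Qwen3', 'GPT-4 Turbo', 'Llama 3.1']
print(models_speaking("Japanese"))  # ['GPT-4 Turbo']
```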

Example 4: Detect Gaps Automatically

You can even allow null values when a field is missing:

from typing import List, Optional

class FlexibleLLMFeatures(BaseModel):
    name: str
    supports_thinking: Optional[bool]
    max_context_tokens: Optional[int]
    languages: Optional[List[str]]

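Each field is still required (in Pydantic v2, Optional[...] without a default means required but nullable), but the model can return null for anything the text does not mention, so the schema remains valid even when some information is unknown.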
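To actually detect the gaps, you can list the fields that came back as null. A minimal standard-library sketch operating on the parsed JSON; `find_gaps` is a hypothetical helper, and the sample reply is made up for illustration:

```python
import json


def find_gaps(record: dict) -> list[str]:
    """Names of fields the model could not fill (returned as null)."""
    return [field for field, value in record.items() if value is None]


# Illustrative reply for a description that never mentions context size or languages
reply = ('{"name": "Model Y", "supports_thinking": true, '
         '"max_context_tokens": null, "languages": null}')
print(find_gaps(json.loads(reply)))  # ['max_context_tokens', 'languages']
```

With a FlexibleLLMFeatures instance, `find_gaps(obj.model_dump())` gives the same list.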

Benefits, Caveats & Best Practices

Using structured output through Ollama (or any system that supports it) offers many advantages — but also has some caveats.

Benefits

- Stronger guarantees: The model is asked to conform to a JSON schema rather than free-form text.

- Easier parsing: You can call json.loads directly or validate with Pydantic / Zod, rather than relying on regex or heuristics.

- Schema-based evolution: You can version your schema, add fields (with defaults), and maintain backward compatibility.

- Interoperability: Downstream systems expect structured data.

- Determinism (better with low temperature): When temperature is low (e.g., 0), the model is more likely to rigidly stick to the schema. Ollama’s docs recommend this.
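To illustrate the schema-evolution point, here is a minimal sketch using a standard-library dataclass instead of Pydantic. The `license` field is a hypothetical v2 addition; because it has a default, records extracted with the older schema still load.

```python
from dataclasses import dataclass, fields


@dataclass
class LLMFeaturesV2:
    name: str
    supports_thinking: bool
    max_context_tokens: int
    languages: list[str]
    # Hypothetical field added in v2; the default keeps v1 records valid
    license: str = "unknown"


def load(record: dict) -> LLMFeaturesV2:
    """Build a V2 object, ignoring keys the current schema does not know."""
    known = {f.name for f in fields(LLMFeaturesV2)}
    return LLMFeaturesV2(**{k: v for k, v in record.items() if k in known})


v1_record = {"name": "Qwen3", "supports_thinking": True,
             "max_context_tokens": 128000, "languages": ["English"]}
print(load(v1_record).license)  # unknown
```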

Caveats & Pitfalls

- Schema mismatch: The model might still deviate—e.g. miss a required property, reorder keys, or include extra fields. You need validation.

- Complex schemas: Very deep or recursive JSON schemas might confuse the model or lead to failures.

- Ambiguity in prompt: If your prompt is vague, the model may guess fields or units incorrectly.

- Inconsistency across models: Some models may be better or worse at honoring structured constraints.

- Token limits: The schema itself adds token cost to the prompt or API call.

Best Practices & Tips (drawn from Ollama’s blog and experience)

- Use Pydantic (Python) or Zod (JavaScript) to define your schemas and auto-generate JSON schemas. This avoids manual errors.

- Always include instructions like “respond in JSON only” or “do not include commentary or extra text” in your prompt.

- Use temperature = 0 (or very low) to minimize randomness and maximize schema adherence. Ollama recommends determinism.

- Validate, and fall back (e.g. retry or clean up) when JSON parsing or schema validation fails.

- Start with a simpler schema, then gradually extend. Don’t overcomplicate initially.

- Tell the model how to handle missing data: e.g. if it cannot fill a required field, it should respond with null rather than omit it (if your schema allows).
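The validate-and-retry advice can be sketched as a small loop. This is a library-agnostic sketch: `generate` stands in for whatever function calls the model (for example a wrapper around `ollama.chat`) and `validate` for your parser or Pydantic check; both names are assumptions, and the fake generator below only simulates a model that fails once.

```python
import json
from typing import Any, Callable


def generate_validated(generate: Callable[[], str],
                       validate: Callable[[str], Any],
                       max_attempts: int = 3) -> Any:
    """Call the model until `validate` accepts its output or attempts run out."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        raw = generate()
        try:
            return validate(raw)
        except ValueError as err:  # json.JSONDecodeError and Pydantic v2's ValidationError are ValueErrors
            last_error = err
            print(f"attempt {attempt} rejected: {err}")
    raise RuntimeError(f"no valid output after {max_attempts} attempts") from last_error


# Simulated model: the first reply is truncated JSON, the second is valid
replies = iter(['{"name": "Qwen3", "max_context',
                '{"name": "Qwen3", "max_context_tokens": 128000}'])
result = generate_validated(lambda: next(replies), json.loads)
print(result)  # {'name': 'Qwen3', 'max_context_tokens': 128000}
```

With Pydantic you could pass `LLMFeatures.model_validate_json` as `validate`.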

Go Example 1: Extracting LLM Features

Here’s a simple Go program that asks Qwen3 for structured output about an LLM’s features.

package main

import (
	"context"
	"encoding/json"
	"fmt"
	"log"

	"github.com/ollama/ollama/api"
)

type LLMFeatures struct {
	Name             string   `json:"name"`
	SupportsThinking bool     `json:"supports_thinking"`
	MaxContextTokens int      `json:"max_context_tokens"`
	Languages        []string `json:"languages"`
}

func main() {
	client, err := api.ClientFromEnvironment()
	if err != nil {
		log.Fatal(err)
	}

	prompt := `
Analyze the following description and return the model’s features in JSON only.
Description:
"Qwen3 has strong multilingual support (English, Chinese, French, Spanish, Arabic).
It allows reasoning steps (chain-of-thought).
The context window is 128K tokens."
`

	// Define the JSON schema for structured output
	formatSchema := map[string]any{
		"type": "object",
		"properties": map[string]any{
			"name":               map[string]string{"type": "string"},
			"supports_thinking":  map[string]string{"type": "boolean"},
			"max_context_tokens": map[string]string{"type": "integer"},
			"languages": map[string]any{
				"type":  "array",
				"items": map[string]string{"type": "string"},
			},
		},
		"required": []string{"name", "supports_thinking", "max_context_tokens", "languages"},
	}

	// Convert schema to JSON
	formatJSON, err := json.Marshal(formatSchema)
	if err != nil {
		log.Fatal("Failed to marshal format schema:", err)
	}

	req := &api.GenerateRequest{
		Model:   "qwen3:8b",
		Prompt:  prompt,
		Format:  formatJSON,
		Options: map[string]any{"temperature": 0},
	}

	var features LLMFeatures
	var rawResponse string
	err = client.Generate(context.Background(), req, func(response api.GenerateResponse) error {
		// Accumulate content as it streams
		rawResponse += response.Response
		// Only parse when the response is complete
		if response.Done {
			if err := json.Unmarshal([]byte(rawResponse), &features); err != nil {
				return fmt.Errorf("JSON parse error: %w", err)
			}
		}
		return nil
	})
	if err != nil {
		log.Fatal(err)
	}

	fmt.Printf("Parsed struct: %+v\n", features)
}

To compile and run this example, assume main.go is in a folder named ollama-struct. Inside that folder, run:

# initialise module
go mod init ollama-struct
# pull all the dependencies
go mod tidy
# build & execute
go build -o ollama-struct main.go
./ollama-struct

Example Output

Parsed struct: {Name:Qwen3 SupportsThinking:true MaxContextTokens:128000 Languages:[English Chinese French Spanish Arabic]}

Go Example 2: Comparing Multiple Models

You can extend this to extract a list of models for comparison.

// At package level, next to LLMFeatures:
type ModelComparison struct {
	Models []LLMFeatures `json:"models"`
}

// Inside main(), after the first example (reuses client, prompt, req and err):
prompt = `
Extract features from the following model descriptions and return as JSON:
1. PaLM 2: This model has limited reasoning capabilities and focuses on basic language understanding. It supports a context window of 8,000 tokens. It primarily supports English language only.
2. LLaMA 2: This model has moderate reasoning abilities and can handle some logical tasks. It can process up to 4,000 tokens in its context. It supports English, Spanish, and Italian languages.
3. Codex: This model has strong reasoning capabilities specifically for programming and code analysis. It has a context window of 16,000 tokens. It supports English, Python, JavaScript, and Java languages.
Return a JSON object with a "models" array containing all models.
`

// Define the JSON schema for model comparison
comparisonSchema := map[string]any{
	"type": "object",
	"properties": map[string]any{
		"models": map[string]any{
			"type": "array",
			"items": map[string]any{
				"type": "object",
				"properties": map[string]any{
					"name":               map[string]string{"type": "string"},
					"supports_thinking":  map[string]string{"type": "boolean"},
					"max_context_tokens": map[string]string{"type": "integer"},
					"languages": map[string]any{
						"type":  "array",
						"items": map[string]string{"type": "string"},
					},
				},
				"required": []string{"name", "supports_thinking", "max_context_tokens", "languages"},
			},
		},
	},
	"required": []string{"models"},
}

// Convert schema to JSON
comparisonFormatJSON, err := json.Marshal(comparisonSchema)
if err != nil {
	log.Fatal("Failed to marshal comparison schema:", err)
}

req = &api.GenerateRequest{
	Model:   "qwen3:8b",
	Prompt:  prompt,
	Format:  comparisonFormatJSON,
	Options: map[string]any{"temperature": 0},
}

var comp ModelComparison
var comparisonResponse string
err = client.Generate(context.Background(), req, func(response api.GenerateResponse) error {
	// Accumulate content as it streams
	comparisonResponse += response.Response
	// Only parse when the response is complete
	if response.Done {
		if err := json.Unmarshal([]byte(comparisonResponse), &comp); err != nil {
			return fmt.Errorf("JSON parse error: %w", err)
		}
	}
	return nil
})
if err != nil {
	log.Fatal(err)
}

for _, m := range comp.Models {
	fmt.Printf("%s: Context=%d, Languages=%v\n", m.Name, m.MaxContextTokens, m.Languages)
}

Example Output

PaLM 2: Context=8000, Languages=[English]

LLaMA 2: Context=4000, Languages=[English Spanish Italian]

Codex: Context=16000, Languages=[English Python JavaScript Java]

By the way, qwen3:4b works as well as qwen3:8b on these examples.

Best Practices for Go Developers

- Set temperature to 0 for maximum schema adherence.

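- Validate with json.Unmarshal and fall back if parsing fails.

- Keep schemas simple; deeply nested or recursive JSON structures may cause issues.

- Allow optional fields if you expect missing data: leave them out of the schema’s required list and use pointer types (e.g. *int) in the Go struct, so a missing or null value stays nil. The omitempty struct tag only affects encoding, not json.Unmarshal.

- Add retries if the model occasionally emits invalid JSON.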

Full example - Drawing a Chart with LLM Specs (Step-by-step: from structured JSON to comparison tables)

- Define a schema for the data you want

Use Pydantic so you can both (a) generate a JSON Schema for Ollama and (b) validate the model’s response.

from pydantic import BaseModel
from typing import List, Optional

class LLMFeatures(BaseModel):
    name: str
    supports_thinking: bool
    max_context_tokens: int
    languages: List[str]

- Ask Ollama to return only JSON in that shape

Pass the schema in format= and turn temperature down for determinism.

from ollama import chat

prompt = """
Extract features for each model. Return JSON only matching the schema.
1) Qwen3 supports chain-of-thought; 128K context; English, Chinese, French, Spanish, Arabic.
2) Llama 3.1 supports chain-of-thought; 128K context; English.
3) GPT-4 Turbo supports chain-of-thought; 128K context; English, Japanese.
"""

resp = chat(
    model="qwen3",
    messages=[{"role": "user", "content": prompt}],
    format={"type": "array", "items": LLMFeatures.model_json_schema()},
    options={"temperature": 0},
)

raw_json = resp.message.content  # JSON list of LLMFeatures

- Validate & normalize

Always validate before using in production.

from pydantic import TypeAdapter

adapter = TypeAdapter(list[LLMFeatures])
models = adapter.validate_json(raw_json)  # -> list[LLMFeatures]
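The code above covers validation; what "normalize" means depends on your data. One minimal sketch, working on the dicts you get from `m.model_dump()`: trim whitespace in names and drop duplicate languages case-insensitively while keeping their order. These particular rules, and the `normalize` helper, are our own assumptions, not something Ollama or Pydantic does for you.

```python
def normalize(record: dict) -> dict:
    """Return a cleaned copy of one model record (name trimmed, languages de-duplicated)."""
    seen = set()
    languages = []
    for lang in record["languages"]:
        cleaned = lang.strip()
        key = cleaned.casefold()
        if cleaned and key not in seen:  # skip empty strings and case-insensitive repeats
            seen.add(key)
            languages.append(cleaned)
    return {**record, "name": record["name"].strip(), "languages": languages}


raw = {"name": " Qwen3 ", "supports_thinking": True, "max_context_tokens": 128000,
       "languages": ["English", "english ", "Chinese", ""]}
print(normalize(raw)["languages"])  # ['English', 'Chinese']
```

In the pipeline this would slot in as `records = [normalize(m.model_dump()) for m in models]`.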

- Build a comparison table (pandas)

Turn your validated objects into a DataFrame you can sort/filter and export.

import pandas as pd

df = pd.DataFrame([m.model_dump() for m in models])
df["languages_count"] = df["languages"].apply(len)
df["languages"] = df["languages"].apply(lambda xs: ", ".join(xs))

# Reorder columns for readability
df = df[["name", "supports_thinking", "max_context_tokens", "languages_count", "languages"]]

# Save as CSV for further use
df.to_csv("llm_feature_comparison.csv", index=False)

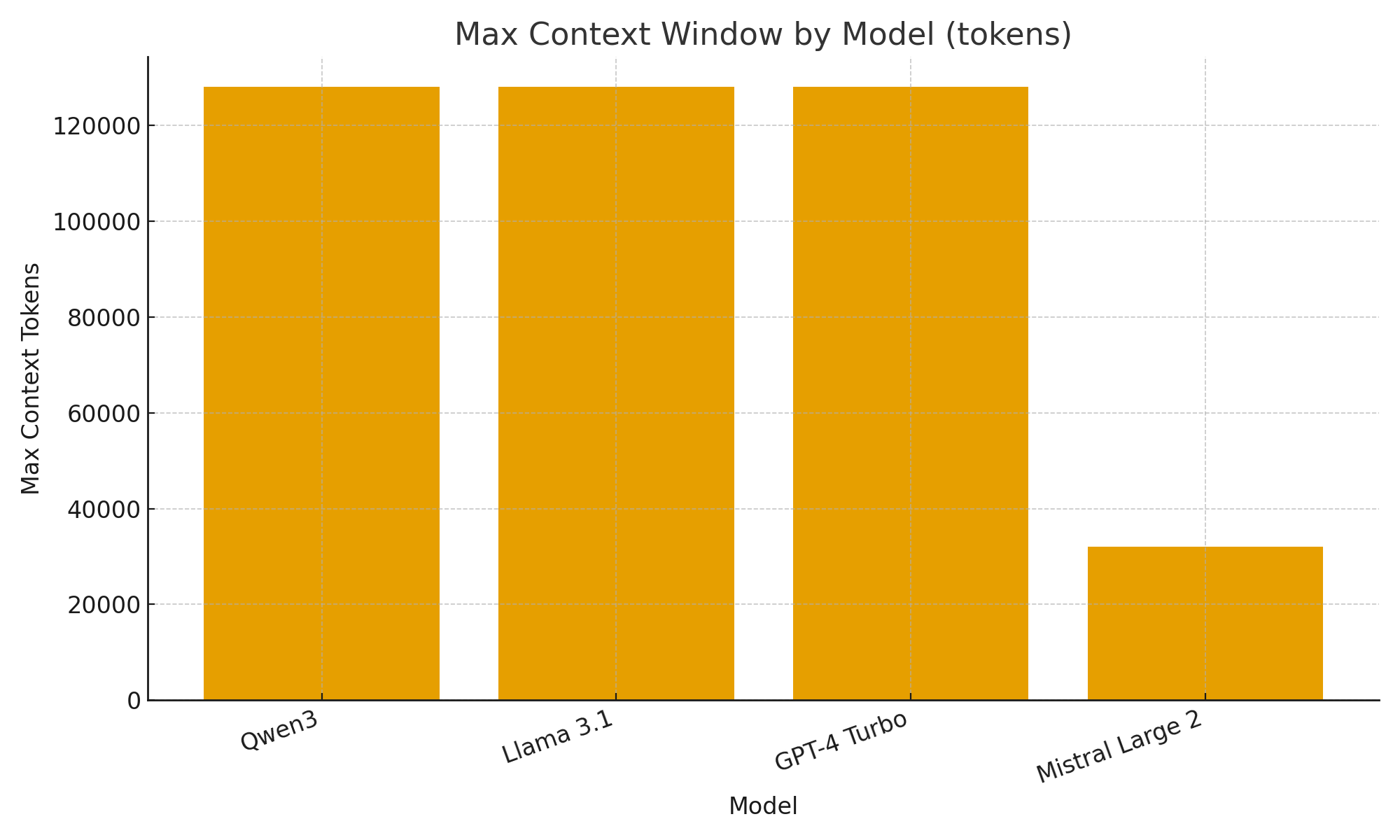
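If you would rather not pull in pandas for a small table, the standard library’s csv module produces the same kind of file. A minimal sketch, assuming `records` is a list of plain dicts such as `[m.model_dump() for m in models]`; `to_csv` is a hypothetical helper and the sample rows are illustrative:

```python
import csv
import io

COLUMNS = ["name", "supports_thinking", "max_context_tokens", "languages_count", "languages"]


def to_csv(records: list[dict]) -> str:
    """Render model records as CSV text with the same columns as the pandas version."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    for r in records:
        writer.writerow({
            "name": r["name"],
            "supports_thinking": r["supports_thinking"],
            "max_context_tokens": r["max_context_tokens"],
            "languages_count": len(r["languages"]),
            "languages": ", ".join(r["languages"]),  # quoted automatically: it contains commas
        })
    return buffer.getvalue()


records = [
    {"name": "Qwen3", "supports_thinking": True, "max_context_tokens": 128000,
     "languages": ["English", "Chinese"]},
    {"name": "Llama 3.1", "supports_thinking": True, "max_context_tokens": 128000,
     "languages": ["English"]},
]
print(to_csv(records))
```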

- (Optional) Quick visuals

Simple charts help you eyeball differences between models quickly.

import matplotlib.pyplot as plt

plt.figure()
plt.bar(df["name"], df["max_context_tokens"])
plt.title("Max Context Window by Model (tokens)")
plt.xlabel("Model")
plt.ylabel("Max Context Tokens")
plt.xticks(rotation=20, ha="right")
plt.tight_layout()
plt.savefig("max_context_window.png")

TL;DR

With Ollama’s new structured output support, you can treat LLMs not just as chatbots but as data extraction engines.

The examples above showed how to automatically extract structured metadata about LLM features like thinking support, context window size, and supported languages — tasks that would otherwise require brittle parsing.

Whether you’re building an LLM model catalog, an evaluation dashboard, or an AI-powered research assistant, structured outputs make integration smooth, reliable, and production-ready.

Useful links

- https://ollama.com/blog/structured-outputs

- Ollama cheatsheet

- Python Cheatsheet

- AWS SAM + AWS SQS + Python PowerTools

- Golang Cheatsheet

- Comparing Go ORMs for PostgreSQL: GORM vs Ent vs Bun vs sqlc

- Reranking text documents with Ollama and Qwen3 Embedding model - in Go

- AWS lambda performance: JavaScript vs Python vs Golang