Docker Model Runner vs Ollama: Which to Choose?

Compare Docker Model Runner and Ollama for local LLM

Running large language models (LLMs) locally has become increasingly popular for privacy, cost control, and offline capabilities. The landscape shifted significantly in April 2025 when Docker introduced Docker Model Runner (DMR), its official solution for AI model deployment.

Now three approaches compete for developer mindshare: Docker’s native Model Runner, third-party containerized solutions (vLLM, TGI), and the standalone Ollama platform.

Understanding Docker Model Runners

Docker-based model runners use containerization to package LLM inference engines along with their dependencies. The landscape includes both Docker’s official solution and third-party frameworks.

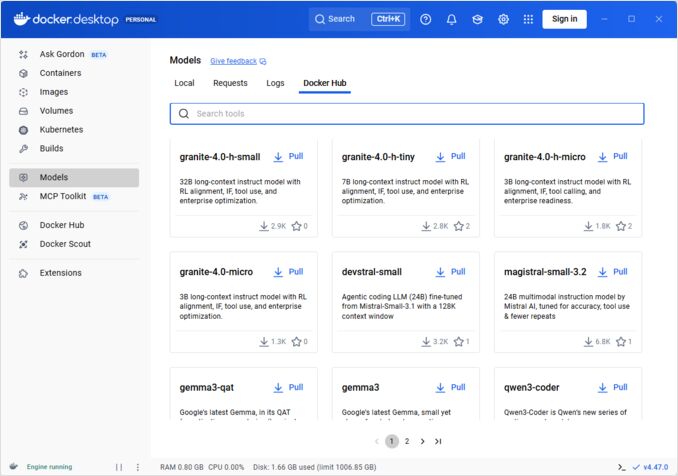

Docker Model Runner (DMR) - Official Solution

In April 2025, Docker introduced Docker Model Runner (DMR), an official product designed to simplify running AI models locally using Docker’s infrastructure. This represents Docker’s commitment to making AI model deployment as seamless as container deployment.

Key Features of DMR:

- Native Docker Integration: Uses familiar Docker commands (docker model pull, docker model run, docker model package)

- OCI Artifact Packaging: Models are packaged as OCI Artifacts, enabling distribution through Docker Hub and other registries

- OpenAI-Compatible API: Drop-in replacement for OpenAI endpoints, simplifying integration

- GPU Acceleration: Native GPU support without complex nvidia-docker configuration

- GGUF Format Support: Works with popular quantized model formats

- Docker Compose Integration: Easily configure and deploy models using standard Docker tooling

- Testcontainers Support: Seamlessly integrates with testing frameworks

Installation:

- Docker Desktop: Enable via AI tab in settings

- Docker Engine: Install the docker-model-plugin package

Example Usage:

```shell
# Pull a model from Docker Hub
docker model pull ai/smollm2

# Run inference
docker model run ai/smollm2 "Explain Docker Model Runner"

# Package custom model
docker model package --gguf /path/to/model.gguf --push myorg/mymodel:latest
```

DMR partners with Google, Hugging Face, and VMware Tanzu to expand the AI model ecosystem available through Docker Hub. If you’re new to Docker or need a refresher on Docker commands, our Docker Cheatsheet provides a comprehensive guide to essential Docker operations.

Third-Party Docker Solutions

Beyond DMR, the ecosystem includes established frameworks:

- vLLM containers: High-throughput inference server optimized for batch processing

- Text Generation Inference (TGI): Hugging Face’s production-ready solution

- llama.cpp containers: Lightweight C++ implementation with quantization

- Custom containers: Wrapping PyTorch, Transformers, or proprietary frameworks

Advantages of Docker Approach

Flexibility and Framework Agnostic: Docker containers can run any LLM framework, from PyTorch to ONNX Runtime, giving developers complete control over the inference stack.

Resource Isolation: Each container operates in isolated environments with defined resource limits (CPU, memory, GPU), preventing resource conflicts in multi-model deployments.

Orchestration Support: Docker integrates seamlessly with Kubernetes, Docker Swarm, and cloud platforms for scaling, load balancing, and high availability.

Version Control: Different model versions or frameworks can coexist on the same system without dependency conflicts.
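The resource limits mentioned above map onto standard docker run flags (--cpus, --memory, --gpus). As a minimal sketch, the helper below only prints the command so it can be reviewed before running; the helper name and the container name are hypothetical, and my-llm-container stands in for whatever image you actually use.

```shell
# limited_run_cmd NAME CPUS MEMORY GPUS IMAGE
# Print (rather than execute) a docker run command with explicit limits.
# --cpus, --memory, and --gpus are standard docker run flags; the values
# and the image name passed in are placeholders.
limited_run_cmd() {
  name=$1 cpus=$2 memory=$3 gpus=$4 image=$5
  printf 'docker run -d --name %s --cpus %s --memory %s --gpus %s %s\n' \
    "$name" "$cpus" "$memory" "$gpus" "$image"
}

# Example: cap a placeholder image at 4 CPUs, 8 GB of RAM, and GPU 0.
limited_run_cmd llm-a 4 8g device=0 my-llm-container
```

Giving each model its own container with its own limits is what keeps one model from starving another on a shared host.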

Disadvantages of Docker Approach

Complexity: Requires understanding of containerization, volume mounts, network configuration, and GPU passthrough (the NVIDIA Container Toolkit, formerly nvidia-docker).

Overhead: While minimal, Docker adds a thin abstraction layer that slightly impacts startup time and resource usage.

Configuration Burden: Each deployment requires careful configuration of Dockerfiles, environment variables, and runtime parameters.

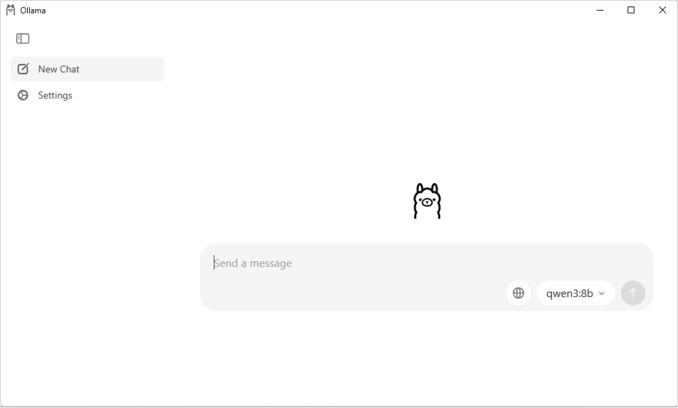

Understanding Ollama

Ollama is a purpose-built application for running LLMs locally, designed with simplicity as its core principle. It provides:

- Native binary for Linux, macOS, and Windows

- Built-in model library with one-command installation

- Automatic GPU detection and optimization

- RESTful API compatible with OpenAI’s format

- Model context and state management

Advantages of Ollama

Simplicity: Installation is straightforward (a one-line curl | sh installer on Linux), and running a model requires just ollama run llama2. For a comprehensive list of Ollama commands and usage patterns, check out our Ollama cheatsheet.

Optimized Performance: Built on llama.cpp, Ollama is highly optimized for inference speed with quantization support (Q4, Q5, Q8).

Model Management: Built-in model registry with commands like ollama pull, ollama list, and ollama rm simplifies model lifecycle.

Developer Experience: Clean API, extensive documentation, and growing ecosystem of integrations (LangChain, CrewAI, etc.). Ollama’s versatility extends to specialized use cases like reranking text documents with embedding models.

Resource Efficiency: Automatic memory management and model unloading when idle conserves system resources.
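The idle unloading described above can be tuned per request through the keep_alive field of Ollama’s native API, which takes a duration string such as 30m. A minimal sketch, assuming a local server on the default port and using llama2 purely as an example model; the helper name is hypothetical:

```shell
# keep_alive_body MODEL DURATION -> JSON body asking Ollama to load MODEL
# and keep it in memory for DURATION (for example 30m) after the request.
# MODEL and DURATION are inserted verbatim, so they must not contain quotes.
keep_alive_body() {
  printf '{"model": "%s", "keep_alive": "%s"}\n' "$1" "$2"
}

keep_alive_body llama2 30m
# prints {"model": "llama2", "keep_alive": "30m"}

# Sending it (not executed here):
#   curl http://localhost:11434/api/generate -d "$(keep_alive_body llama2 30m)"
```

A request without a prompt only loads the model, so this is a cheap way to pre-warm it before real traffic arrives.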

Disadvantages of Ollama

Framework Lock-in: Primarily supports llama.cpp-compatible models, limiting flexibility for frameworks like vLLM or custom inference engines.

Limited Customization: Advanced configurations (custom quantization, specific CUDA streams) are less accessible than in Docker environments.

Orchestration Challenges: While Ollama can run in containers, it lacks native support for advanced orchestration features like horizontal scaling.

Performance Comparison

Inference Speed

Docker Model Runner: Performance is comparable to Ollama because both run GGUF quantized models. As a rough guide for Llama 2 7B (Q4), expect around 20-30 tokens/second on CPU and 50-80 tokens/second on mid-range GPUs; actual figures vary widely with hardware. Container overhead is minimal.

Ollama: Uses a highly optimized llama.cpp backend with efficient quantization, so the same rough figures apply for Llama 2 7B (Q4): around 20-30 tokens/second on CPU and 50-80 tokens/second on mid-range GPUs, with no containerization overhead. For details on how Ollama manages concurrent inference, see our analysis on how Ollama handles parallel requests.

Docker (vLLM): Optimized for batch processing with continuous batching. Single requests may be slightly slower, but throughput excels under high concurrent load (100+ tokens/second per model with batching).

Docker (TGI): Similar to vLLM with excellent batching performance. Adds features like streaming and token-by-token generation.
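Throughput figures like these depend heavily on hardware, so it is worth measuring your own. As a minimal sketch: Ollama’s native generate response reports eval_count (tokens generated) and eval_duration (nanoseconds), and dividing one by the other gives tokens per second. The helper name is hypothetical and the numbers in the example are made up.

```shell
# tokens_per_second EVAL_COUNT EVAL_DURATION_NS
# Convert the two counters from a generate response into tokens/second.
tokens_per_second() {
  awk -v n="$1" -v ns="$2" 'BEGIN {
    if (ns <= 0) { print "0.0"; exit }
    printf "%.1f\n", n / (ns / 1000000000)
  }'
}

# Example with made-up numbers: 120 tokens generated in 4 seconds.
tokens_per_second 120 4000000000
# prints 30.0
```

Run the same prompt a few times and compare the results; the first request also includes model loading, which skews a single measurement.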

Memory Usage

Docker Model Runner: Similar to Ollama with automatic model loading. GGUF Q4 models typically use 4-6GB RAM. Container overhead is minimal (tens of MB).

Ollama: Automatic memory management loads models on-demand and unloads when idle. A 7B Q4 model typically uses 4-6GB RAM. Most efficient for single-model scenarios.

Traditional Docker Solutions: Memory depends on the framework. vLLM pre-allocates GPU memory for optimal performance, while PyTorch-based containers may use more RAM for model weights and KV cache (8-14GB for 7B models).
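The memory ranges above follow from simple arithmetic: weight size is roughly the parameter count times the bits per weight, divided by 8. The sketch below assumes about 4.5 bits per weight for a 4-bit GGUF quantization (4-bit values plus per-block scales) and 16 bits for unquantized half precision. The helper name is hypothetical, and KV cache and runtime overhead come on top of the result.

```shell
# weights_gb PARAMS_BILLIONS BITS_PER_WEIGHT
# Approximate size of the model weights in GB (10^9 bytes).
weights_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

weights_gb 7 4.5   # 7B parameters at ~4.5 bits per weight: prints 3.9
weights_gb 7 16    # the same model at 16-bit precision: prints 14.0
```

The first result lines up with the lower end of the 4-6GB range for a 7B Q4 model, and the second with the upper end of the 8-14GB range for PyTorch-based containers.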

Startup Time

Docker Model Runner: Container startup adds ~1 second, plus model loading (2-5 seconds). Total: 3-6 seconds for medium models.

Ollama: Near-instant startup with model loading taking 2-5 seconds for medium-sized models. Fastest cold start experience.

Traditional Docker: Container startup adds 1-3 seconds, plus model loading time. Pre-warming containers mitigates this in production deployments.

Docker Model Runner vs Ollama: Direct Comparison

With Docker’s official entry into the LLM runner space, the comparison becomes more interesting. Here’s how DMR and Ollama stack up head-to-head:

| Feature | Docker Model Runner | Ollama |
|---|---|---|
| Installation | Docker Desktop AI tab or docker-model-plugin | Single command: curl \| sh |
| Command Style | docker model pull/run/package | ollama pull/run/list |
| Model Format | GGUF (OCI Artifacts) | GGUF (native) |
| Model Distribution | Docker Hub, OCI registries | Ollama registry |
| GPU Setup | Automatic (simpler than traditional Docker) | Automatic |
| API | OpenAI-compatible | OpenAI-compatible |
| Docker Integration | Native (is Docker) | Runs in Docker if needed |
| Compose Support | Native | Via Docker image |
| Learning Curve | Low (for Docker users) | Lowest (for everyone) |
| Ecosystem Partners | Google, Hugging Face, VMware | LangChain, CrewAI, Open WebUI |
| Best For | Docker-native workflows | Standalone simplicity |

Key Insight: DMR brings Docker workflows to LLM deployment, while Ollama stays independent of any container platform and offers simpler standalone operation. Your existing infrastructure matters more than technical differences.

Use Case Recommendations

Choose Docker Model Runner When

- Docker-first workflow: Your team already uses Docker extensively

- Unified tooling: You want one tool (Docker) for containers and models

- OCI artifact distribution: You need enterprise registry integration

- Testcontainers integration: You’re testing AI features in CI/CD

- Docker Hub preference: You want model distribution through familiar channels

Choose Ollama When

- Rapid prototyping: Quick experimentation with different models

- Platform independence: Not tied to the Docker ecosystem

- Absolute simplicity: Minimal configuration and maintenance overhead

- Single-server deployments: Running on laptops, workstations, or single VMs

- Large model library: Access to extensive pre-configured model registry

Choose Third-Party Docker Solutions When

- Production deployments: Need for advanced orchestration and monitoring

- Multi-model serving: Running different frameworks (vLLM, TGI) simultaneously

- Kubernetes orchestration: Scaling across clusters with load balancing

- Custom frameworks: Using Ray Serve or proprietary inference engines

- Strict resource control: Enforcing granular CPU/GPU limits per model

Hybrid Approaches: Best of Both Worlds

You’re not limited to a single approach. Consider these hybrid strategies:

Option 1: Docker Model Runner + Traditional Containers

Use DMR for standard models and third-party containers for specialized frameworks:

```shell
# Pull a standard model with DMR
docker model pull ai/llama2

# Run vLLM for high-throughput scenarios (its API server listens on port 8000)
docker run --gpus all -p 8000:8000 vllm/vllm-openai
```

Option 2: Ollama in Docker

Run Ollama inside Docker containers for orchestration capabilities:

```shell
docker run -d \
  --name ollama \
  --gpus all \
  -v ollama:/root/.ollama \
  -p 11434:11434 \
  ollama/ollama
```

This provides:

- Ollama’s intuitive model management

- Docker’s orchestration and isolation capabilities

- Kubernetes deployment with standard manifests
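After the container starts, the API takes a moment to come up. A small retry helper can gate follow-up steps on readiness. This is a generic sketch, not part of Ollama or Docker, and the name wait_until is hypothetical:

```shell
# wait_until ATTEMPTS DELAY_SECONDS COMMAND...
# Re-run COMMAND until it succeeds or ATTEMPTS tries have been made.
wait_until() {
  attempts=$1 delay=$2
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Succeed as soon as the probe command exits with status 0.
    "$@" >/dev/null 2>&1 && return 0
    i=$((i + 1))
    # Pause between tries, but not after the final failed one.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Usage once the container is running (not executed here):
#   wait_until 30 1 curl -fsS http://localhost:11434/api/tags && echo "Ollama is ready"
```

The same helper works as a readiness gate in CI scripts, where a model pull or a test run should only start once the server answers.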

Option 3: Mix and Match by Use Case

- Development: Ollama for rapid iteration

- Staging: Docker Model Runner for integration testing

- Production: vLLM/TGI in Kubernetes for scale

API Compatibility

All modern solutions converge on OpenAI-compatible APIs, simplifying integration:

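Docker Model Runner API: OpenAI-compatible endpoints are served by the model runner itself, and models are loaded on demand when a request arrives. On Docker Desktop, host-side TCP access must be enabled before the API is reachable from the host (the default port is 12434).

```shell
# Pull the model; the runner loads it when the first request arrives
docker model pull ai/llama2

# Use the OpenAI-compatible endpoint
curl http://localhost:12434/engines/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/llama2",
    "messages": [{"role": "user", "content": "Why is the sky blue?"}]
  }'
```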

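Ollama API: Ollama exposes OpenAI-compatible endpoints under /v1 (for example /v1/chat/completions), which makes it a drop-in replacement for applications using OpenAI’s SDK, alongside its native API under /api. Streaming is fully supported. The example below uses the native generate endpoint:

```shell
curl http://localhost:11434/api/generate -d '{
  "model": "llama2",
  "prompt": "Why is the sky blue?"
}'
```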

Third-Party Docker APIs: vLLM and TGI offer OpenAI-compatible endpoints, while custom containers may implement proprietary APIs.

The convergence on OpenAI compatibility means you can switch between solutions with minimal code changes.
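In practice, switching often comes down to changing a base URL and a model name. The sketch below illustrates this with two hypothetical helpers, chat_url and chat. The default base URLs are assumptions about a local setup (DMR with host-side TCP access on port 12434 under /engines/v1, Ollama on port 11434 under /v1); override them through the environment if your ports or paths differ.

```shell
# Base URLs are assumptions about a default local setup.
DMR_BASE_URL=${DMR_BASE_URL:-http://localhost:12434/engines/v1}
OLLAMA_BASE_URL=${OLLAMA_BASE_URL:-http://localhost:11434/v1}

# chat_url BACKEND -> prints the chat completions URL for "dmr" or "ollama".
chat_url() {
  case $1 in
    dmr)    printf '%s/chat/completions\n' "$DMR_BASE_URL" ;;
    ollama) printf '%s/chat/completions\n' "$OLLAMA_BASE_URL" ;;
    *)      echo "unknown backend: $1" >&2; return 1 ;;
  esac
}

# chat BACKEND MODEL PROMPT -> sends one user message to the chosen backend.
# MODEL and PROMPT are inserted verbatim, so in this simplified sketch they
# must not contain double quotes or backslashes.
chat() {
  url=$(chat_url "$1") || return 1
  curl -fsS "$url" \
    -H "Content-Type: application/json" \
    -d "{\"model\": \"$2\", \"messages\": [{\"role\": \"user\", \"content\": \"$3\"}]}"
}

# Usage (not executed here):
#   chat ollama llama2 "Why is the sky blue?"
#   chat dmr ai/llama2 "Why is the sky blue?"
```

Keeping the backend choice in one function means application code only ever sees a URL and a model name, which is what makes the migration paths later in this article cheap.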

Resource Management

GPU Acceleration

Docker Model Runner: Native GPU support without complex nvidia-docker configuration. Automatically detects and uses available GPUs, significantly simplifying the Docker GPU experience compared to traditional containers.

```shell
# GPU acceleration works automatically
docker model run ai/llama2
```

Ollama: Automatic GPU detection for NVIDIA (CUDA), AMD (ROCm), and Apple Silicon (Metal) GPUs. No configuration needed beyond driver installation.

Traditional Docker Containers: Require the NVIDIA Container Toolkit (the successor to nvidia-docker) on the host and explicit GPU allocation:

```shell
docker run --gpus all my-llm-container
```

CPU Fallback

Both Docker-based runners and Ollama gracefully fall back to CPU inference when GPUs are unavailable, though performance decreases significantly (5-10x slower for large models). For insights into CPU-only performance on modern processors, read our test on how Ollama uses Intel CPU Performance and Efficient Cores.

Multi-GPU Support

Ollama: Can spread a large model across multiple GPUs by splitting its layers between them, which helps with models that do not fit in a single GPU’s memory.

Docker: Depends on the framework. vLLM and TGI support multi-GPU inference with proper configuration.

Community and Ecosystem

Docker Model Runner: Launched April 2025 with strong enterprise backing. Partnerships with Google, Hugging Face, and VMware Tanzu AI Solutions ensure broad model availability. Integration with Docker’s massive developer community (millions of users) provides instant ecosystem access. Still building community-specific resources as a new product.

Ollama: Rapidly growing community with 50K+ GitHub stars. Strong integration ecosystem (LangChain, LiteLLM, Open WebUI, CrewAI) and active Discord community. Extensive third-party tools and tutorials available. More mature documentation and community resources. For a comprehensive overview of available interfaces, see our guide to open-source chat UIs for local Ollama instances. As with any rapidly growing open-source project, it’s important to monitor the project’s direction - read our analysis of early signs of Ollama enshittification to understand potential concerns.

Third-Party Docker Solutions: vLLM and TGI have mature ecosystems with enterprise support. Extensive production case studies, optimization guides, and deployment patterns from Hugging Face and community contributors.

Cost Considerations

Docker Model Runner: Free with Docker Desktop (personal/educational) or Docker Engine. Docker Desktop requires subscription for larger organizations (250+ employees or $10M+ revenue). Models distributed through Docker Hub follow Docker’s registry pricing (free public repos, paid private repos).

Ollama: Completely free and open-source with no licensing costs regardless of organization size. Resource costs depend only on hardware.

Third-Party Docker Solutions: Free for open-source frameworks (vLLM, TGI). Potential costs for container orchestration platforms (ECS, GKE) and private registry storage.

Security Considerations

Docker Model Runner: Leverages Docker’s security model with container isolation. Models packaged as OCI Artifacts can be scanned and signed. Distribution through Docker Hub enables access control and vulnerability scanning for enterprise users.

Ollama: Runs as a local service with API exposed on localhost by default. Network exposure requires explicit configuration. Models in the official library are curated by Ollama, which reduces supply chain risk, but user-uploaded models deserve the same scrutiny as any third-party artifact.

Traditional Docker Solutions: Network isolation is built-in. Container security scanning (Snyk, Trivy) and image signing are standard practices in production environments.

All solutions require attention to:

- Model provenance: Untrusted models may contain malicious code or backdoors

- API authentication: Implement authentication/authorization in production deployments

- Rate limiting: Prevent abuse and resource exhaustion

- Network exposure: Ensure APIs are not inadvertently exposed to the internet

- Data privacy: Models process sensitive data; ensure compliance with data protection regulations
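For the network exposure point, a quick check is whether the address a server binds to is loopback-only. The sketch below is a simplified illustration with a hypothetical helper name. It inspects a host[:port] string such as the value given to Ollama’s OLLAMA_HOST variable and does not replace a real firewall or port scan.

```shell
# is_loopback_bind HOST[:PORT] -> succeeds only for loopback addresses.
is_loopback_bind() {
  # Strip a trailing :PORT, then match the remaining host part.
  case ${1%:*} in
    127.*|localhost|"[::1]") return 0 ;;
    *) return 1 ;;
  esac
}

for addr in 127.0.0.1:11434 0.0.0.0:11434; do
  if is_loopback_bind "$addr"; then
    echo "$addr: local only"
  else
    echo "$addr: reachable from other hosts, add authentication or a firewall"
  fi
done
```

The same idea applies to published container ports: docker run -p 127.0.0.1:11434:11434 publishes the port on the loopback interface only, while -p 11434:11434 publishes it on all interfaces.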

Migration Paths

From Ollama to Docker Model Runner

Docker Model Runner’s GGUF support makes migration simple:

- Enable Docker Model Runner in Docker Desktop or install docker-model-plugin

- Convert model references: ollama run llama2 → docker model pull ai/llama2 and docker model run ai/llama2

- Update API endpoints from localhost:11434 to the DMR endpoint (localhost:12434 by default when host-side TCP access is enabled)

- Both use OpenAI-compatible APIs, so application code requires minimal changes

From Docker Model Runner to Ollama

Moving to Ollama for simpler standalone operation:

- Install Ollama: curl -fsSL https://ollama.ai/install.sh | sh

- Pull equivalent models: ollama pull llama2

- Update API endpoints to Ollama’s localhost:11434

- Test with ollama run llama2 to verify functionality

From Traditional Docker Containers to DMR

Simplify your Docker LLM setup:

- Enable Docker Model Runner

- Replace custom Dockerfiles with docker model pull commands

- Remove nvidia-docker configuration (DMR handles GPU automatically)

- Use docker model run instead of complex docker run commands

From Any Solution to Ollama in Docker

Best-of-both-worlds approach:

- Pull the image: docker pull ollama/ollama

- Run: docker run -d --name ollama --gpus all -v ollama:/root/.ollama -p 11434:11434 ollama/ollama

- Use Ollama commands as usual: docker exec -it ollama ollama pull llama2

- Gain Docker orchestration with Ollama simplicity

Monitoring and Observability

Ollama: Basic status information via API (/api/tags lists installed models, /api/ps lists models currently loaded). Third-party tools like Open WebUI provide dashboards.

Docker: Full integration with Prometheus, Grafana, ELK stack, and cloud monitoring services. Container metrics (CPU, memory, GPU) are readily available.

Conclusion

The landscape of local LLM deployment has evolved significantly with Docker’s introduction of Docker Model Runner (DMR) in 2025. The choice now depends on your specific requirements:

- For developers seeking Docker integration: DMR provides native Docker workflow integration with docker model commands

- For maximum simplicity: Ollama remains the easiest solution with its one-command model management

- For production and enterprise: Both DMR and third-party solutions (vLLM, TGI) in Docker offer orchestration, monitoring, and scalability

- For the best of both: Run Ollama in Docker containers to combine simplicity with production infrastructure

The introduction of DMR narrows the gap between Docker and Ollama in terms of ease of use. Ollama still wins on simplicity for quick prototyping, while DMR excels for teams already invested in Docker workflows. Both approaches are actively developed and production-ready, and the ecosystem is mature enough that switching between them is relatively painless.

Bottom Line: If you’re already using Docker extensively, DMR is the natural choice. If you want the absolute simplest experience regardless of infrastructure, choose Ollama.

Useful Links

Docker Model Runner

- Docker Model Runner Official Page

- Docker Model Runner Documentation

- Docker Model Runner Get Started Guide

- Docker Model Runner Announcement Blog

Other Useful Articles

- Ollama cheatsheet

- Docker Cheatsheet

- How Ollama Handles Parallel Requests

- Test: How Ollama is using Intel CPU Performance and Efficient Cores

- Reranking text documents with Ollama and Qwen3 Embedding model - in Go

- Open-Source Chat UIs for LLMs on Local Ollama Instances

- First Signs of Ollama Enshittification