Farfalle vs Perplexica

Comparing two self-hosted AI search engines

Awesome food is a pleasure for the eyes too. But in this post we will compare two AI-based search systems, Farfalle and Perplexica.

By the way, this shape of pasta is called “farfalle” too.

But here I’m comparing only how these two systems behave, not the pasta shapes.

OK. Concentrate!

See also: “Perplexica installation and configuration - with Ollama” and “Install and configure Ollama”.

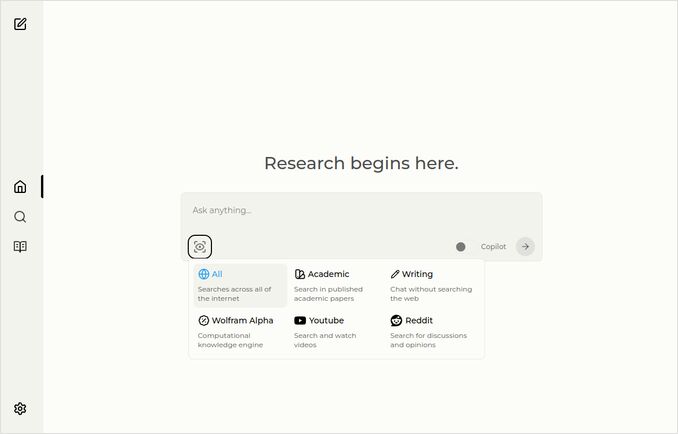

Perplexica

Here is the home page with the source selection dropdown.

- Perplexica is implemented in

  - TypeScript and React.js (UI)

  - TypeScript and Express.js (backend)

  - also includes configuration for the SearxNG metasearch engine

- Has a Copilot mode

- Can use any Ollama-hosted LLM as a chat or embeddings model

- Has three small internal embedding models
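Since Perplexica can use any model that Ollama serves, it helps to know what is actually installed. Here is a minimal sketch that asks a local Ollama instance for its model list through the `/api/tags` endpoint. The address is Ollama's default and is an assumption about your setup; the function names are mine, not anything from Perplexica.

```python
import json
from urllib.request import urlopen

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list[str]:
    """Extract the model names from the JSON returned by Ollama's /api/tags."""
    return sorted(m["name"] for m in tags_payload.get("models", []))


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask a running Ollama instance which models it has pulled."""
    with urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return model_names(json.load(resp))
```

With Ollama running, `list_local_models()` should return the same names that `ollama list` prints, and any of them should be a candidate for Perplexica's chat or embeddings model setting.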

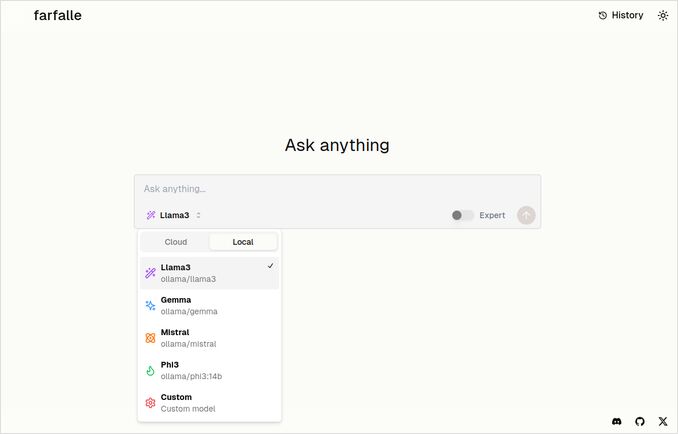

Farfalle

- Farfalle is implemented in

  - TypeScript and React.js (UI)

  - Python FastAPI (backend)

  - also includes a reference to the SearxNG metasearch engine. There is not much configuration for it, just a pretty standard Docker image.

- Has an Expert mode, which should derive several requests from the original one and combine the responses. But this didn’t work in our test.

- Can use four Ollama-hosted LLMs as a chat model (llama3, mistral, gemma, phi3). That’s a good set of options, but I would prefer it to be more flexible and allow any model that Ollama serves.

- No options for embeddings model
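To see what that restriction means in practice, here is a small sketch that splits a list of Ollama model names into those Farfalle could use and the rest. It matches on the family name only (the part before the `:` tag), which is a simplification on my side: Farfalle ships predefined versions, so a matching family does not guarantee the exact tag is accepted. The helper names are hypothetical.

```python
# The four model families listed above as selectable in Farfalle.
FARFALLE_FAMILIES = ("llama3", "mistral", "gemma", "phi3")


def family_of(model_name: str) -> str:
    """Return the part of an Ollama model name before the tag ('llama3:8b' -> 'llama3')."""
    return model_name.split(":", 1)[0]


def split_by_farfalle_support(model_names: list[str]) -> tuple[list[str], list[str]]:
    """Split model names into (family offered by Farfalle, everything else)."""
    usable = [n for n in model_names if family_of(n) in FARFALLE_FAMILIES]
    other = [n for n in model_names if family_of(n) not in FARFALLE_FAMILIES]
    return usable, other


usable, other = split_by_farfalle_support(
    ["llama3:8b", "qwen2:7b", "phi3:latest", "gemma2:9b"]
)
print("Farfalle families:", usable)
print("Perplexica only:", other)
```

Note that a newer model such as `gemma2` lands in the second group, because its family name is not one of the four.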

Comparison done by Perplexica and Farfalle themselves

I ran the same request on both: “Compare farfalle and perplexica based on robustness, community, support and LLM supported”.

Both Perplexica and Farfalle were using the llama3 model hosted on a local Ollama instance. The full name of the model is llama3 8b q4.
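For a baseline, the same request can also be sent straight to the model, without any search engine in front of it. Below is a minimal sketch using Ollama's `/api/generate` endpoint. The default address and the `llama3` tag are assumptions about the local setup, and the function names are mine. Keep in mind that the bare model has no web search results to ground its answer, so this only shows what the LLM says on its own.

```python
import json
from urllib.request import Request, urlopen

# Assumptions: default local Ollama address, and a model pulled under the tag "llama3".
OLLAMA_URL = "http://localhost:11434"
PROMPT = (
    "Compare farfalle and perplexica based on robustness, community, "
    "support and LLM supported"
)


def build_generate_request(prompt: str, model: str = "llama3") -> dict:
    """Build the JSON body for a non-streaming /api/generate call."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt: str, model: str = "llama3", base_url: str = OLLAMA_URL) -> str:
    """POST the prompt to Ollama and return the generated text."""
    body = json.dumps(build_generate_request(prompt, model)).encode("utf-8")
    req = Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

Calling `ask_ollama(PROMPT)` with Ollama running returns the raw model answer as a string.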

Don’t believe 100% of what the responses say; just look at the text length, structure and overall response quality.

I would believe approx. 70%…

Perplexica comparison response

Comparison of Farfalle and Perplexica

Robustness

Farfalle: 8/10 - Farfalle is a popular AI search engine known for its reliability and ability to handle complex queries. It has a strong foundation in natural language processing (NLP) and machine learning (ML). While it may not be perfect, Farfalle’s robustness is well-established.

Perplexica: 9/10 - Perplexica is built on top of Meta AI’s Phi-3 model, which is designed for robustness. It has been tested to provide accurate answers even when faced with ambiguous or unclear queries. Its ability to rephrase questions and adapt to different contexts makes it a robust search engine.

Community

Farfalle: 7/10 - Farfalle has a dedicated community of users who appreciate its capabilities. While it may not have the same level of adoption as Perplexica, Farfalle’s user base is still significant.

Perplexica: 8.5/10 - Perplexica has gained widespread recognition for its innovative approach to search engines. Its community is growing rapidly, with many users appreciating its ability to provide accurate and relevant results.

Support

Farfalle: 6.5/10 - Farfalle provides basic support through FAQs, documentation, and forums. While it’s not as comprehensive as Perplexica’s support, Farfalle’s user base is generally self-sufficient.

Perplexica: 9/10 - Perplexica offers extensive support resources, including detailed documentation, FAQs, and community forums. Its developers are actively engaged with the community, ensuring that users receive timely and effective assistance.

LLMs Supported

Farfalle: No - Farfalle is a standalone AI search engine that doesn’t rely on large language models (LLMs) for its capabilities.

Perplexica: Yes - Perplexica is built on top of Meta AI’s Phi-3 model, which is an LLM. This allows Perplexica to leverage the power of deep learning for more accurate and relevant results.

Overall Score

Farfalle: 22/30

Perplexica: 34.5/40

Based on these criteria, Perplexica appears to have a slight advantage over Farfalle in terms of robustness, community support, and LLM integration. However, both search engines have their strengths, and the choice ultimately depends on your specific needs and preferences.

Recommendation

If you’re looking for a reliable AI search engine with strong NLP capabilities, Perplexica might be the better choice. If you prefer a more straightforward, no-frills approach to search, Farfalle could still provide excellent results.
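Stepping out of the quoted response for a moment: the overall scores in it can be checked against its own per-category numbers. This is a quick sketch that just adds them up, assuming each numeric category is scored out of 10 and that “LLMs Supported” (answered Yes/No) carries no points.

```python
# Per-category scores copied from the Perplexica response above
# (robustness, community, support). "LLMs Supported" got Yes/No, not a number.
SCORES = {
    "Farfalle": [8, 7, 6.5],
    "Perplexica": [9, 8.5, 9],
}
MAX_PER_CATEGORY = 10  # assumption: every numeric category is out of 10


def overall(scores: list[float]) -> tuple[float, int]:
    """Return (sum of scores, maximum possible) for one system."""
    return sum(scores), MAX_PER_CATEGORY * len(scores)


for name, values in SCORES.items():
    got, out_of = overall(values)
    print(f"{name}: {got}/{out_of}")
```

This prints 21.5/30 for Farfalle and 26.5/30 for Perplexica, while the response reports 22/30 and 34.5/40. So the totals do not follow from the listed scores, which is one more reason to read these answers for structure rather than for facts.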

Farfalle comparison response

Comparison of Farfalle and Perplexica

Farfalle and Perplexica are two AI-powered search engines that have gained attention in recent times. Here’s a comparison of these two projects based on robustness, community, support, and LLM (Large Language Model) support.

Robustness

- Farfalle is designed to be self-hosted with local or cloud LLMs, making it a more robust option for users who want control over their search engine.

- Perplexica, on the other hand, is an open-source alternative to Perplexity AI and is constantly being improved by its developers.

Community

- Perplexica has a dedicated community of developers and users who contribute to its development and provide support

- Farfalle’s community is smaller, but it still has a presence on GitHub

Support

- Perplexica offers regular updates and improvements, as well as a Discord channel for discussing matters outside of regular support

- Farfalle’s support is limited to GitHub issues and pull requests

LLM Support

Both Farfalle and Perplexica support LLMs. Perplexica uses Ollama, a local LLM, while Farfalle supports both local and cloud LLMs.

In summary, Farfalle is more robust due to its self-hosted design, while Perplexica has a stronger community and better support. Both projects support LLMs, but Perplexica uses Ollama specifically.

Conclusion

What is the difference between Perplexica and Farfalle?

The answer:

- Putting aside some factual errors, both systems think Perplexica is better than Farfalle, and I’d agree with that.

- I really like the Farfalle UI, though the two are pretty close.

- I like the idea of Farfalle’s Expert mode, though it didn’t work in my environment.

- I came across 3 bugs when running Farfalle. Perplexica’s code is more polished.

- Once configured, Perplexica ran without any errors.

- Perplexica allows any Ollama-hosted model, while Farfalle allows just Gemma, Llama3, Mistral and Phi3 (predefined versions, which are a little outdated).

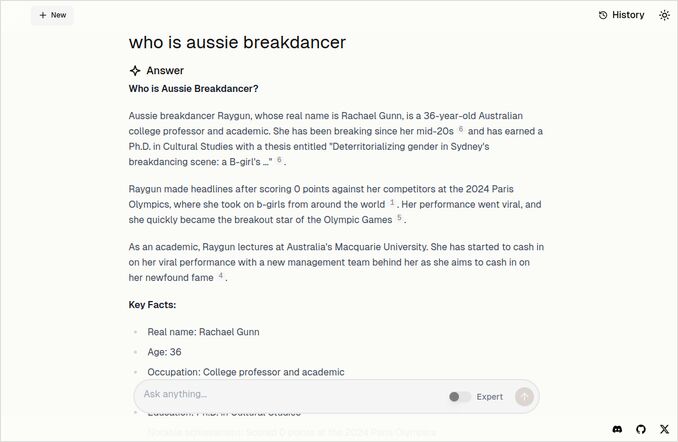

- I like Farfalle’s responses more. Look at the Farfalle image below. Right to the point, without those “According to the context provided”…

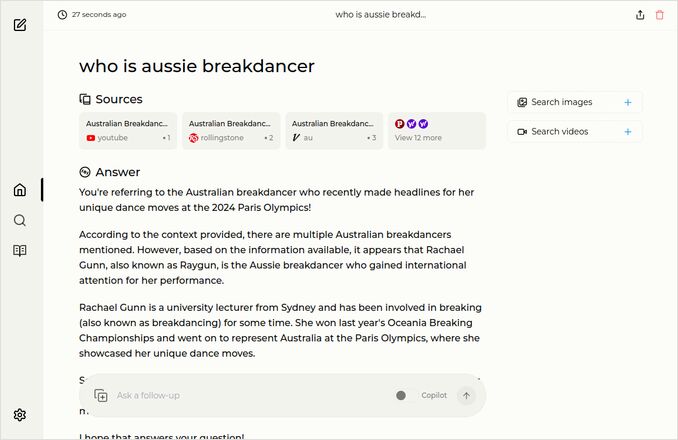

More examples

These show the responses to the same question about the Aussie breakdancer. You know, the one with a PhD who got 0 (zero) points and probably got breakdancing removed from the Olympic programme.

Farfalle response

Perplexica response

If you have read up (or down) to this last paragraph, thank you for having the same interests as I do. These are really exciting times. Have a great day!
